Transparency Report

Transparency reporting is an important accountability measure that provides insight into how we are improving safety on Youinroll.

Safety Philosophy

Youinroll is a service that was built to encourage users to express themselves and entertain one another, but we also want our community to be, and feel, safe. Our Community Guidelines attempt to balance these needs by allowing users to express themselves without saying or doing anything that is harmful to others (or illegal). Our goal is to foster a community that supports and sustains creators, provides a welcoming and entertaining environment for viewers, and eliminates illegal, negative and harmful interactions.

At Youinroll, we believe everyone in our community (creators, viewers and Youinroll itself) plays a role in promoting the safety and health of our community. Through the Community Guidelines, we try to make clear what expression and behavior are allowed on the service, and what is not. We then rely on community action and reporting, along with technological solutions, to ensure the Community Guidelines are upheld. Creators and moderators also use tools that we and third parties provide to enforce Youinroll's service-wide standards, or to set higher standards in their own channels.

Our Approach to Safety

Youinroll is a live-streaming service. The vast majority of the content that appears on Youinroll is gone the moment it’s created and seen. That fact requires us to think about safety and community health differently from services that are primarily based on pre-recorded, uploaded content. Content moderation solutions that work for video-upload services do not work, or work differently, on Youinroll. Through experimentation and investment, we have learned that user safety on Youinroll is best protected, and most scalable, when we employ a range of tools and processes, and when we partner with, and empower, our community members.

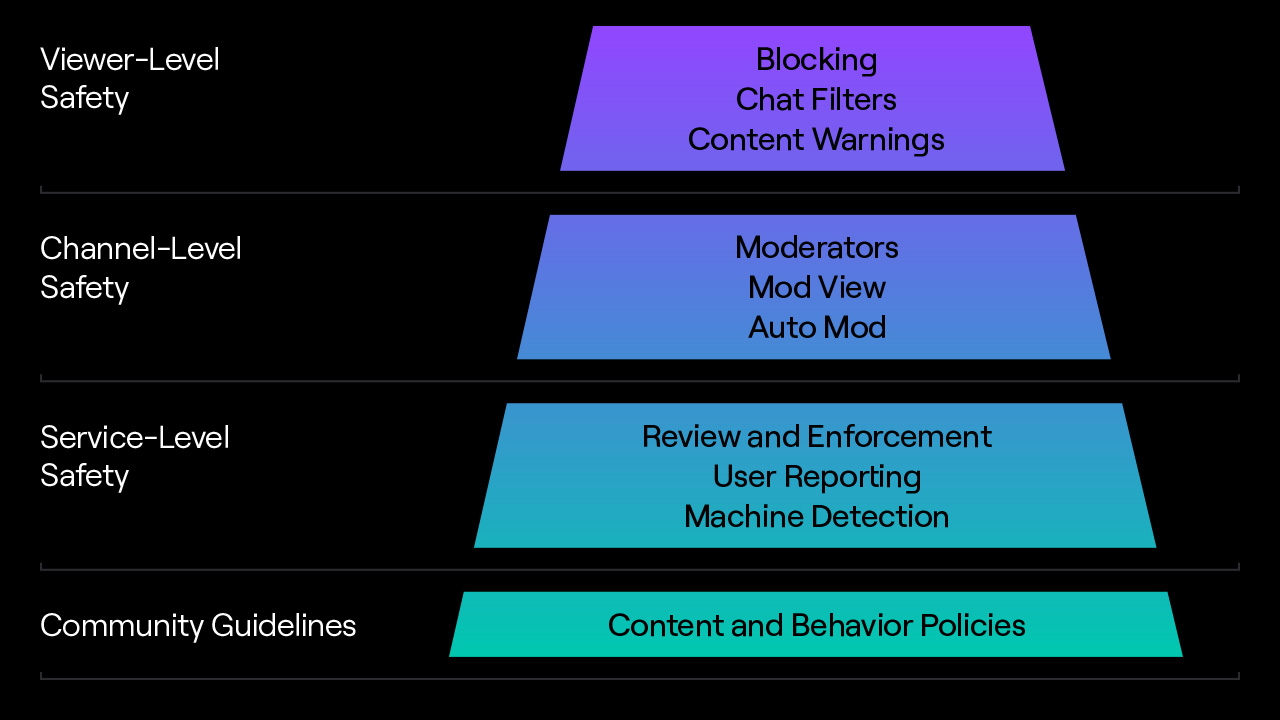

The result is a layered approach to safety: one that combines the efforts of Youinroll (through tooling and staffing) and members of the community, working together. It starts with our Community Guidelines, which balance user expression with community safety and set expectations for the behavior we want on Youinroll. Creators are expected to uphold these service-wide standards in their channels, and are invited to raise the bar if they choose. We provide creators with tools to set, communicate and enforce the standards of behavior in their channels. We also provide viewer-level controls that let viewers control the content they see. At the same time, Youinroll applies various technologies to proactively detect and remove certain kinds of harmful content before users ever encounter it. Finally, we empower users to report harmful or inappropriate behavior to Youinroll. These reports are reviewed and acted on by a team of skilled, trained professionals who can apply service-wide enforcement actions.

In the following sections, we discuss each of these layers in detail (from the bottom of the pyramid to the top) and how they fit together.

Community Guidelines

The Youinroll Community Guidelines are the foundation of our safety ecosystem. These guidelines set the guardrails for all user-generated content and activity on the service. Because the Community Guidelines communicate the expectations for behavior on Youinroll, clarity is important; over time, we have continued to increase clarity by adding descriptions and specific examples of prohibited behavior and content (and specific exceptions) wherever possible. We also recognize that Youinroll community culture is constantly changing, which leads us to continuously review and evolve our Community Guidelines. We believe that by communicating our expectations and clearly communicating updates, we help Youinroll users understand the boundaries we have set and feel free and confident in expressing themselves within those boundaries. We also believe that clear, relevant Community Guidelines are critical to establishing consistency in our enforcement actions.

Service Level Safety

Service-level safety consists of the technology and operations used to uphold the Community Guidelines across Youinroll. It has three parts: machine detection, user reporting, and review and enforcement.

Machine Detection: Over the last two years, we have implemented technologies that scan content on the service and flag it for review by human specialists (we call this “machine detection”). Examples of content flagged this way include nudity, sexual content, gore and extreme violence. Youinroll is predominantly a live-streaming service, and most of the content that is streamed is not recorded or uploaded. Because live content is ephemeral, live streaming is a particularly challenging environment for machine detection. Nevertheless, we have found ways to make machine detection viable and useful on Youinroll, and we will continue to invest in improving these technologies.

User Reporting: Community reports are a crucial part of maintaining the safety and trust of our community and upholding our Community Guidelines. We believe user reporting is particularly effective on Youinroll because the vast majority of content on Youinroll (video and chat) is public. We encourage creators, mods and viewers to report content that violates our Community Guidelines so we can take appropriate action on a service-wide basis. User reports are sent to our team of content moderation professionals for review.

Review and Enforcement: At Youinroll, we have a group of highly trained and experienced professionals who review user reports and content flagged by our machine detection tools. These content moderation professionals work across multiple locations and support over 20 languages in order to provide 24/7/365 capacity to review reports as they come in from around the globe. Reports are prioritized so that the most harmful behavior can be dealt with most quickly. Review time for any given report depends on a number of factors, including the severity of the report, the availability of evidence to support it, and the current volume of the report queue. We also employ a team of experienced investigators to delve into the most egregious reports and liaise with law enforcement as necessary.
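
To make severity-based prioritization concrete, here is a minimal Python sketch of a single review queue fed by both user reports and machine-detection flags, in which the most severe item is always reviewed first and ties go to the oldest item. The severity categories, their ordering and the `ReviewQueue` class are hypothetical illustrations, not a description of Youinroll's internal systems. The sketch models severity only; evidence and queue volume, which also affect review time, are left out.

```python
from __future__ import annotations

import heapq
import itertools
from dataclasses import dataclass, field

# Hypothetical severity scale: a lower number means more harmful, so reviewed sooner.
SEVERITY = {"imminent_harm": 0, "sexual_content": 1, "harassment": 2, "spam": 3}


@dataclass(order=True)
class QueuedItem:
    severity: int
    seq: int  # tie-breaker: within a severity level, earlier items come first
    source: str = field(compare=False)  # "user_report" or "machine_detection"
    description: str = field(compare=False)


class ReviewQueue:
    """Hypothetical queue shared by user reports and machine-detection flags."""

    def __init__(self) -> None:
        self._heap: list[QueuedItem] = []
        self._counter = itertools.count()

    def submit(self, category: str, source: str, description: str) -> None:
        item = QueuedItem(SEVERITY[category], next(self._counter), source, description)
        heapq.heappush(self._heap, item)

    def next_for_review(self) -> QueuedItem | None:
        # Remove and return the most severe, oldest item, or None if the queue is empty.
        return heapq.heappop(self._heap) if self._heap else None

    def __len__(self) -> int:
        return len(self._heap)


queue = ReviewQueue()
queue.submit("spam", "user_report", "repeated links in chat")
queue.submit("imminent_harm", "user_report", "threat against a viewer")
queue.submit("sexual_content", "machine_detection", "flagged stream frame")
print(queue.next_for_review().description)  # threat against a viewer
```

A heap keeps both submission and retrieval at O(log n), which suits a queue that receives items continuously; the sequence counter preserves first-in, first-out order within each severity level.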

We recognize that our content moderation professionals spend a lot of time reviewing a wide range of content that can be negative or disturbing, and we take their health and safety as seriously as we take the health and safety of the Youinroll community. To fulfill this commitment, we have invested in tooling that reduces the harmful effects of certain content on reviewers; for example, the system our reviewers use automatically shows potentially harmful videos in black-and-white, at lower resolution, and/or muted. We also prioritize programs and benefits that protect our reviewers' mental well-being, such as extensive access to dedicated counsellors, and we structure workflows, workplaces and processes to promote employee wellness by design.

Channel-Level Safety

On Youinroll, we believe safety should also be personal. We enable our creators to set their own standards of acceptable and unacceptable community behavior, as long as these standards also comply with our Community Guidelines. To foster a culture of accountability, creators can build a team of community moderators (colloquially known as “mods”), who moderate chat in the creator’s channel. (Mods can be easily identified in chat by the green sword icon that appears next to their username.) Moderators play many roles, from welcoming new viewers to the channel, to answering questions, to modeling and enforcing channel-level standards. We provide both creators and their mods with a powerful suite of tools, such as AutoMod, Chat Modes and Mod View, to make their roles as easy and intuitive as possible. These tools let creators and mods automatically filter chat, review (and delete) questionable chat messages before they are displayed on the channel, give users “time outs” (locking them out of chat for a period of time), or permanently block them from the channel.

Viewer-Level Safety

In addition to the tools that we provide creators and their mods, we also want viewers to be able to customize the safety of their experience. To enable that, we provide viewers with features, such as mature flags, chat filters and the ability to block other users, that they can use to customize the content and interactions they encounter across the service.
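
Because these viewer controls belong to the viewer rather than to a channel, they apply wherever that viewer goes and change only what that viewer sees. The Python sketch below illustrates that idea for two of the controls named above, blocking users and filtering chat. The `ViewerSettings` class, the whole-word matching rule and the example names are hypothetical assumptions, not Youinroll's implementation.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field


@dataclass
class ViewerSettings:
    """Hypothetical preferences owned by one viewer, applied in every channel they visit."""

    blocked_users: set[str] = field(default_factory=set)
    filtered_terms: set[str] = field(default_factory=set)  # single words, lower-case


def visible_to_viewer(settings: ViewerSettings, sender: str, text: str) -> bool:
    """Return True if this viewer should see the message; other viewers are unaffected."""
    if sender in settings.blocked_users:
        return False
    words = set(re.findall(r"\w+", text.lower()))  # whole-word matching
    return words.isdisjoint(settings.filtered_terms)


settings = ViewerSettings(blocked_users={"troll42"}, filtered_terms={"spoiler"})
print(visible_to_viewer(settings, "troll42", "hi there"))           # False
print(visible_to_viewer(settings, "friend", "Big SPOILER ahead!"))  # False
print(visible_to_viewer(settings, "friend", "great play"))          # True
```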

H1 2021 Safety Updates

Community Guidelines: In H1 2021, we made two updates to our Community Guidelines aimed at reducing the risk of severe harms to our community. These new policies address (1) harms that occur off Youinroll, and (2) the risk of severe harms that may arise from influential individuals’ use of Youinroll:

Off-Service Misconduct: Launched in April 2021, our Off-Service Conduct Policy addresses serious offenses that pose a substantial physical safety risk to the Youinroll community, such as extreme or deadly violence, acts of terrorism, leadership of or membership in a known hate group, sexual assault, the sexual exploitation of minors, and other acts or credible threats that endanger members of the Youinroll community or Youinroll staff, even if these offenses occur entirely off Youinroll.

Investigating off-service offenses is complex and involves balancing important interests of safety and privacy. To ensure we are equipped to investigate these cases thoroughly, impartially and fairly, we have made a substantial investment in our investigations team. We increased the size of the team, which enhanced our internal enforcement response capabilities, and partnered with an outside law firm that specializes in investigations.

Incitement to Violence: In May 2021, we introduced a policy that allows us to preemptively suspend accounts when we believe an individual’s use of Youinroll poses a high likelihood of inciting real-world violence.

We made both of these updates to better protect our communities on and off Youinroll. We are constantly re-assessing our policies and processes, and evaluating the need for new policies, with the aim of reducing harm on Youinroll and making it a place where healthy communities can thrive.

Operational Capacity: We are committed to ensuring that user safety reports are reviewed as quickly and thoroughly as possible, and we have continued to invest heavily in increasing our capacity to achieve this goal.

In our inaugural Transparency Report (covering H2 2020), we reported a 4x increase in the number of content moderation professionals available to respond to user reports. In H1 2021, we continued to increase our capacity to keep pace with growth in usage of the Youinroll service. First, we invested in the teams responsible for deep-dive investigations of complex cases, reviewing suspension appeals, proactive content review, and our quality assurance program. Second, from October 2020 to June 2021, we quadrupled the capacity of our Law Enforcement Response (LER) team, which focuses on harms against children, counter-terrorism, law enforcement interactions, and serious off-service violations.

Moderation in Channels: Coverage, Removals and Enforcements

Overview

On Youinroll, we empower creators to build communities that are unique and personal, paired with the expectation that those communities be healthy and abide by the Youinroll Community Guidelines. To accomplish this, many Youinroll creators ask trusted members of their communities to help moderate chat in the creator’s channel. These channel moderators (“mods”) and moderation tools are the foundation of chat moderation in every creator’s Youinroll channel. To make this model work, we invest heavily in providing creators and their mods with tools that are flexible and powerful enough to enforce their community standards within their channel. We focus our moderator support tooling on two main areas: identifying potentially harmful content for moderator review, and scaling moderator controls to keep up with fast-moving Youinroll chat.

Creators and their mods can use tooling provided by Youinroll to manage who can chat in their channel and what content can be seen in chat. To manage who is actively participating in their community, creators and their mods can remove bad actors from chat by issuing temporary and/or permanent bans; these bans delete a chatter’s recent messages from the channel and prevent them from sending further messages in the channel for as long as they are suspended. Creators and mods can also change settings to restrict chat to more trusted groups, such as followers or subscribers only. Mods can send their own chat messages, which carry their green Moderator badge, to guide the tone of the chat. To control which messages can be seen in chat, creators and mods use two core features: AutoMod and Blocked Terms. When enabled, AutoMod pre-screens chat messages in 17 languages and holds messages that contain content detected as risky, preventing them from being visible on the channel unless a mod approves them. Blocked Terms let creators further tailor AutoMod by adding custom terms or phrases that will always be blocked in their channel. These features are best used through Mod View, a customizable channel interface that provides mods with a toolkit of ‘widgets’ for moderation tasks such as reviewing messages held by AutoMod, keeping tabs on actions taken by other mods on their team, and changing moderation settings.
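
One plausible ordering of the checks described above (who may chat, then Blocked Terms, then AutoMod holding risky messages until a mod approves them) can be sketched as a small Python pipeline. Everything here is hypothetical: `ChannelChat`, the setting names and especially `placeholder_risk_score`, a toy keyword check standing in for AutoMod's real multilingual risk detection, which this document does not describe.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field
from enum import Enum


class ChatMode(Enum):
    EVERYONE = "everyone"
    FOLLOWERS_ONLY = "followers_only"
    SUBSCRIBERS_ONLY = "subscribers_only"


@dataclass
class Chatter:
    name: str
    is_follower: bool = False
    is_subscriber: bool = False


@dataclass
class ChannelSettings:
    mode: ChatMode = ChatMode.EVERYONE
    automod_enabled: bool = True
    automod_threshold: float = 0.5  # hypothetical: hold messages scoring at or above this
    blocked_terms: set[str] = field(default_factory=set)  # words or phrases


def placeholder_risk_score(text: str) -> float:
    """Toy stand-in for AutoMod's risk detection (NOT the real model): 0.0 or 1.0."""
    toy_risky_words = {"idiot", "loser"}
    return 1.0 if toy_risky_words & set(re.findall(r"\w+", text.lower())) else 0.0


@dataclass
class ChannelChat:
    """Hypothetical channel chat pipeline: chat mode, then Blocked Terms, then AutoMod."""

    settings: ChannelSettings
    visible: list[str] = field(default_factory=list)
    held: list[str] = field(default_factory=list)  # waiting for a mod's decision

    def can_chat(self, chatter: Chatter) -> bool:
        if self.settings.mode is ChatMode.FOLLOWERS_ONLY:
            return chatter.is_follower
        if self.settings.mode is ChatMode.SUBSCRIBERS_ONLY:
            return chatter.is_subscriber
        return True

    def submit(self, chatter: Chatter, text: str) -> str:
        if not self.can_chat(chatter):
            return "rejected"
        lowered = text.lower()
        for term in self.settings.blocked_terms:
            if re.search(rf"\b{re.escape(term.lower())}\b", lowered):
                return "blocked"  # Blocked Terms are never shown
        if self.settings.automod_enabled and (
            placeholder_risk_score(text) >= self.settings.automod_threshold
        ):
            self.held.append(text)
            return "held"  # invisible unless a mod approves it
        self.visible.append(text)
        return "published"

    def approve(self, text: str) -> None:
        self.held.remove(text)
        self.visible.append(text)


chat = ChannelChat(ChannelSettings(mode=ChatMode.FOLLOWERS_ONLY, blocked_terms={"buy followers"}))
fan = Chatter("fan", is_follower=True)
print(chat.submit(Chatter("drive_by"), "hello"))       # rejected
print(chat.submit(fan, "cheap BUY FOLLOWERS here"))     # blocked
print(chat.submit(fan, "I played like a loser today"))  # held
print(chat.submit(fan, "gg"))                           # published
chat.approve("I played like a loser today")             # a mod judges it harmless
print(chat.visible)  # ['gg', 'I played like a loser today']
```

The held list is what a moderator would work through (in Youinroll's case, via Mod View); until a mod acts, a held message is simply absent from what the channel displays.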

It’s important to remember that actions taken by a creator and their moderator(s) can only affect a user’s access in that channel. Channel bans, time-outs and chat deletion only apply within a channel, and do not affect the user’s access to other channels or other parts of the Youinroll service. However, creators and moderators (or any Youinroll user) can report conduct that violates our Community Guidelines through the Youinroll reporting tool, which can then be actioned on a service-wide basis by Youinroll moderation staff.

The following sections provide additional information regarding how creators and their moderators set and enforce standards for the chat in their own channels.

Moderation of Chat

The overwhelming majority of user interaction on Youinroll occurs in channels that are moderated by channel moderators, AutoMod, or both.

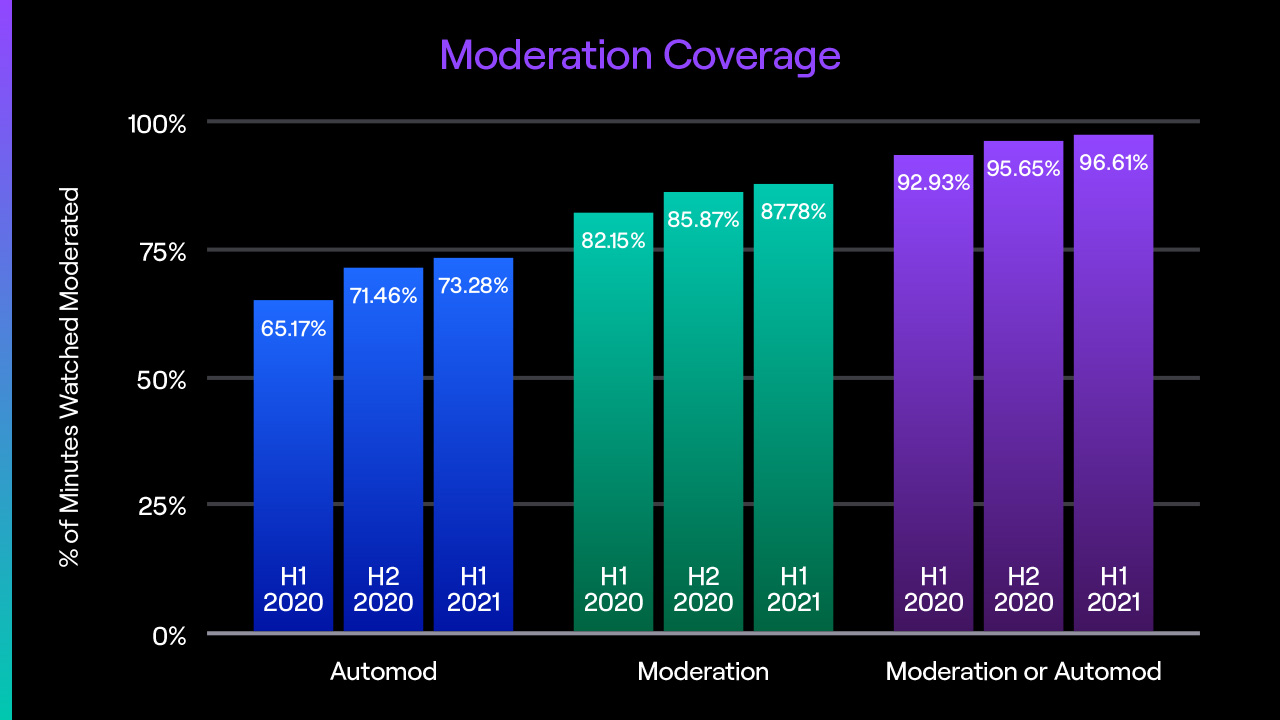

In the past year, human moderators, and streamers using Youinroll-provided channel moderation tools (such as AutoMod), have extended moderation to a larger share of what is watched on Youinroll. From H2 2020 to H1 2021, AutoMod coverage, measured as the portion of live minutes watched on channels that have Youinroll's AutoMod feature actively monitoring chat, increased from 71.5% to 73.28%. We believe this increase in AutoMod coverage is attributable to the increase in new channels over this period, which have AutoMod enabled by default. Moderation coverage by human moderators and/or third-party moderation bots, measured as the fraction of live minutes watched on channels that had moderation actions, increased from 85.9% to 87.8%. This is likely the result of increased viewership of large channels, which are more likely to have active human moderation and/or third-party moderation bots because of their fast-paced chat. Combined, total moderation coverage (either AutoMod or active moderators) increased from 95.7% to 96.6%. This increase in coverage shows that moderation has scaled with channel growth on Youinroll.
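
All three coverage figures are shares of live minutes watched, and the combined figure counts a channel's minutes if either condition holds. A minimal Python sketch of those definitions follows, using made-up toy data rather than any figures from this report; the `ChannelPeriod` record is a hypothetical per-channel aggregate.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ChannelPeriod:
    """Hypothetical per-channel aggregate for one reporting period."""

    minutes_watched: float
    automod_on: bool
    had_mod_actions: bool  # by human moderators and/or third-party bots


def coverage(channels: list[ChannelPeriod]) -> dict[str, float]:
    total = sum(c.minutes_watched for c in channels)
    if total == 0:
        raise ValueError("no live minutes watched in this period")

    def share(pred) -> float:
        # Fraction of all live minutes watched on channels matching the predicate.
        return sum(c.minutes_watched for c in channels if pred(c)) / total

    return {
        "automod": share(lambda c: c.automod_on),
        "moderation": share(lambda c: c.had_mod_actions),
        "combined": share(lambda c: c.automod_on or c.had_mod_actions),
    }


# Toy data, not figures from this report.
sample = [
    ChannelPeriod(600, automod_on=True, had_mod_actions=True),
    ChannelPeriod(250, automod_on=False, had_mod_actions=True),
    ChannelPeriod(100, automod_on=True, had_mod_actions=False),
    ChannelPeriod(50, automod_on=False, had_mod_actions=False),
]
print(coverage(sample))  # {'automod': 0.7, 'moderation': 0.85, 'combined': 0.95}
```

Because the combined figure is a union, it is always at least as large as either individual figure, which is why total coverage (96.6%) exceeds both AutoMod coverage and moderation coverage.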

Proactive and Manual Removals of Chat Messages

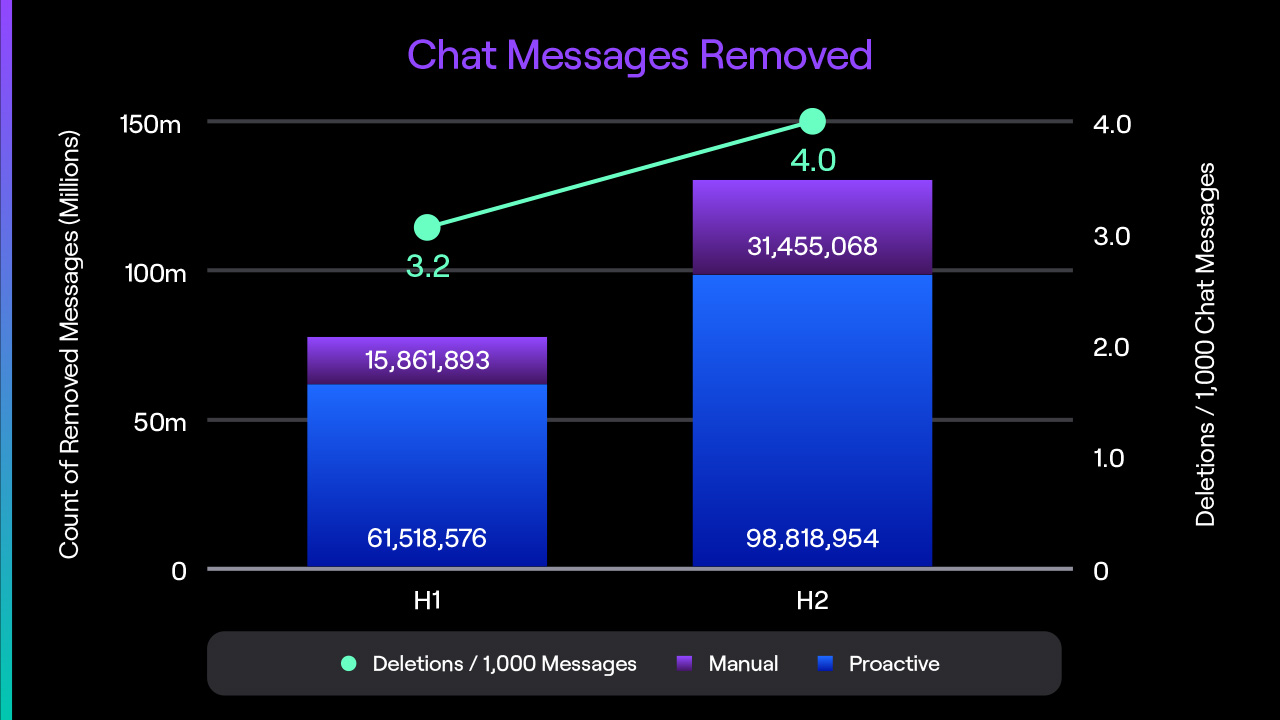

The vast majority of content removals on Youinroll are removals of chat messages by channel moderators (either manually or with the help of proactive channel moderation tools) within individual channels. Youinroll provides tools such as customizable Blocked Terms and AutoMod (described in more detail above), which allow channels to apply filters that proactively screen messages out of chat before they are seen. Channel moderators can also actively monitor chat and delete harmful or disruptive messages within seconds after they are posted.

In H2 2020, 32.6 billion chat messages were sent on Youinroll, and in H1 2021 that number increased to 37.5 billion (a 15% increase). From H2 2020 to H1 2021, the number of messages proactively removed through tools such as AutoMod, Blocked Terms and chat modes increased from 98.8M to 132.4M (a 34% increase). Manual removal of messages by moderators increased from 31.5M to 37.1M (an 18% increase). Deletions per thousand messages sent increased from 4.0 to 4.5 (a 13% increase). A portion of this increase in chat message deletions reflects both improvements to AutoMod, which enabled streamers and moderators to remove more harmful messages, and increased human moderation coverage across the service.
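
The percentages in this paragraph can be reproduced from the published totals. The Python snippet below does so, assuming that "deletions" means proactive plus manual removals (an assumption, though it matches both stated per-thousand rates).

```python
# Published totals from this section, in millions of messages.
sent = {"H2 2020": 32_600, "H1 2021": 37_500}
proactive = {"H2 2020": 98.8, "H1 2021": 132.4}
manual = {"H2 2020": 31.5, "H1 2021": 37.1}


def pct_change(before: float, after: float) -> float:
    return (after - before) / before * 100


def deletions_per_thousand(period: str) -> float:
    # Assumption: deletions = proactive removals + manual removals.
    return (proactive[period] + manual[period]) / sent[period] * 1000


for name, series in [("messages sent", sent), ("proactive removals", proactive),
                     ("manual removals", manual)]:
    print(f"{name}: {pct_change(series['H2 2020'], series['H1 2021']):+.0f}%")

for period in sent:
    print(f"{period}: {deletions_per_thousand(period):.1f} deletions per 1,000 messages")

rate_change = pct_change(deletions_per_thousand("H2 2020"), deletions_per_thousand("H1 2021"))
print(f"change in rate: {rate_change:+.0f}%")
# messages sent: +15%
# proactive removals: +34%
# manual removals: +18%
# H2 2020: 4.0 deletions per 1,000 messages
# H1 2021: 4.5 deletions per 1,000 messages
# change in rate: +13%
```

Working from the unrounded rates (about 4.00 and 4.52) yields the 13% change; the rounded values 4.0 and 4.5 alone would give 12.5%.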

Channel Enforcement Actions

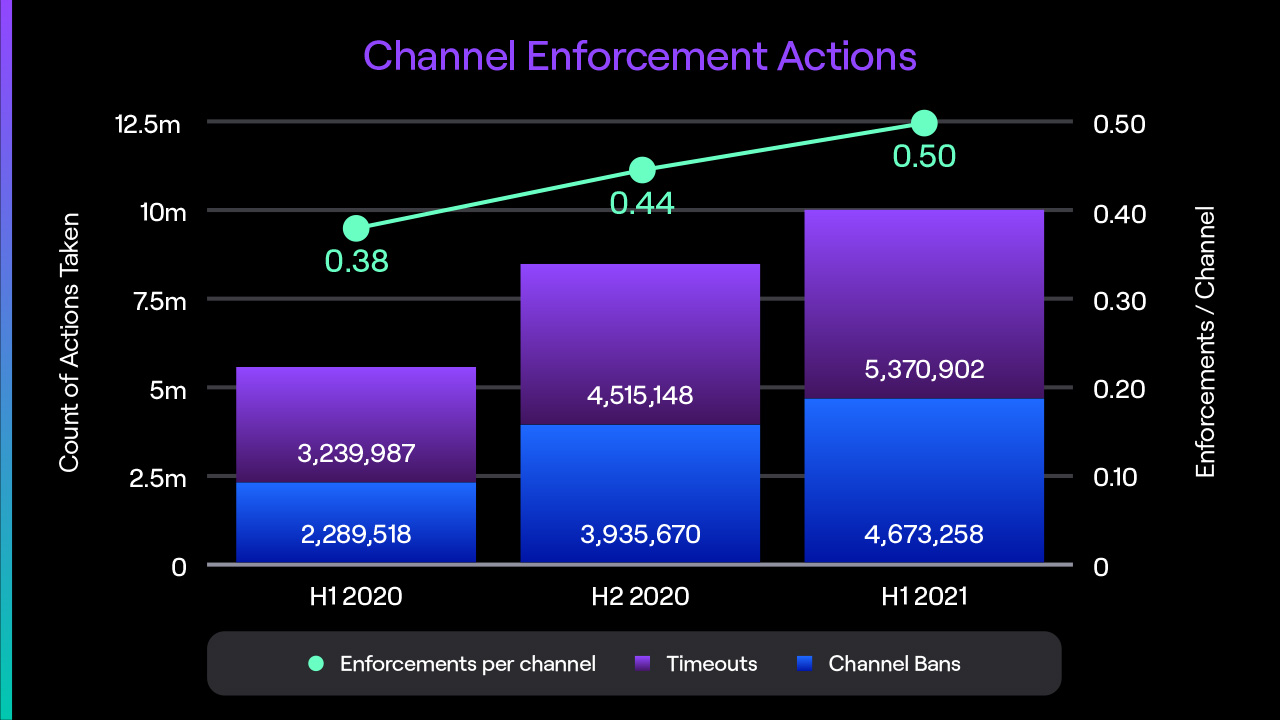

In addition to deleting messages, channel moderators can choose to remove harmful and disruptive users from a channel, either using a timeout for a customizable length of time, or an outright ban, to prevent any future harm that a disruptive user might cause in the channel. Banned users may submit an appeal, called an Unban Request, should they wish to return to the channel. Unban decisions are at the discretion of the channel owner and their moderators.

Across the service, channel-level bans increased from 3.9M to 4.7M (a 19% increase) from H2 2020 to H1 2021. Timeouts, or temporary suspensions, increased from 4.5M to 5.4M (also a 19% increase). The average number of enforcements per channel increased from 0.44 to 0.50 (a 12% increase). We believe this increase in channel-level suspensions is the result of increased moderation coverage in channels (both active moderation and use of Youinroll channel moderation tools).

Reports and Enforcements

It’s worth noting again that Youinroll is a live-streaming service, so the vast majority of the content on Youinroll is ephemeral. For this reason, we do not consider content removal the primary means of enforcing streamer adherence to our Community Guidelines. Content is flagged either by machine detection or via user-submitted reports, and our team of content moderation professionals is responsible for reviewing these reports and issuing the appropriate enforcements for verified violations. The type of enforcement issued is based on a number of factors and can range from a warning, to a timed suspension, to an indefinite suspension. If there is recorded content associated with the violation, such as a recorded video (VOD) or a clip, that content is removed. That said, most enforcements do not require content removal: apart from the report, there is no longer a record of the violation, because the live, violative content is already gone. For this reason, we believe the most appropriate measure of our safety efforts is the total number of enforcements issued, and we have oriented the following sections of this report around that measure.

For clarity, please note that the enforcement statistics in the following sections do not include, and are not duplicative of, the channel-level enforcements discussed in the previous section.

Reports Made on Youinroll

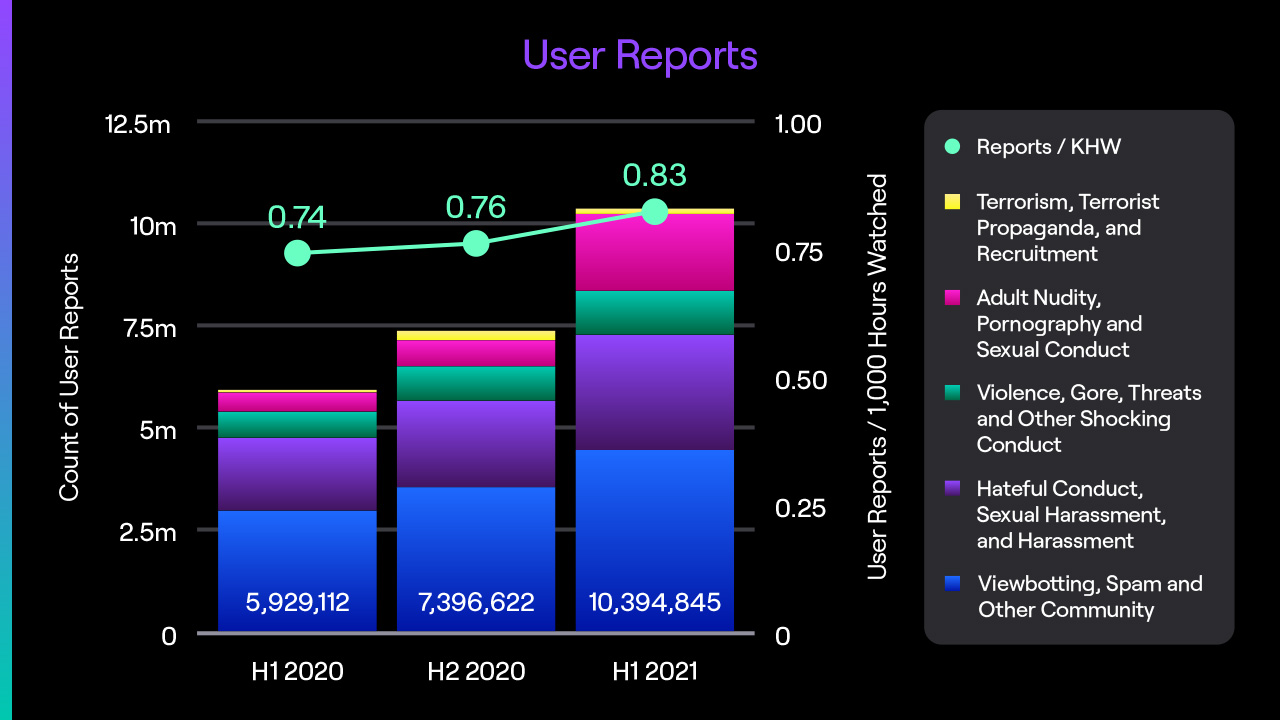

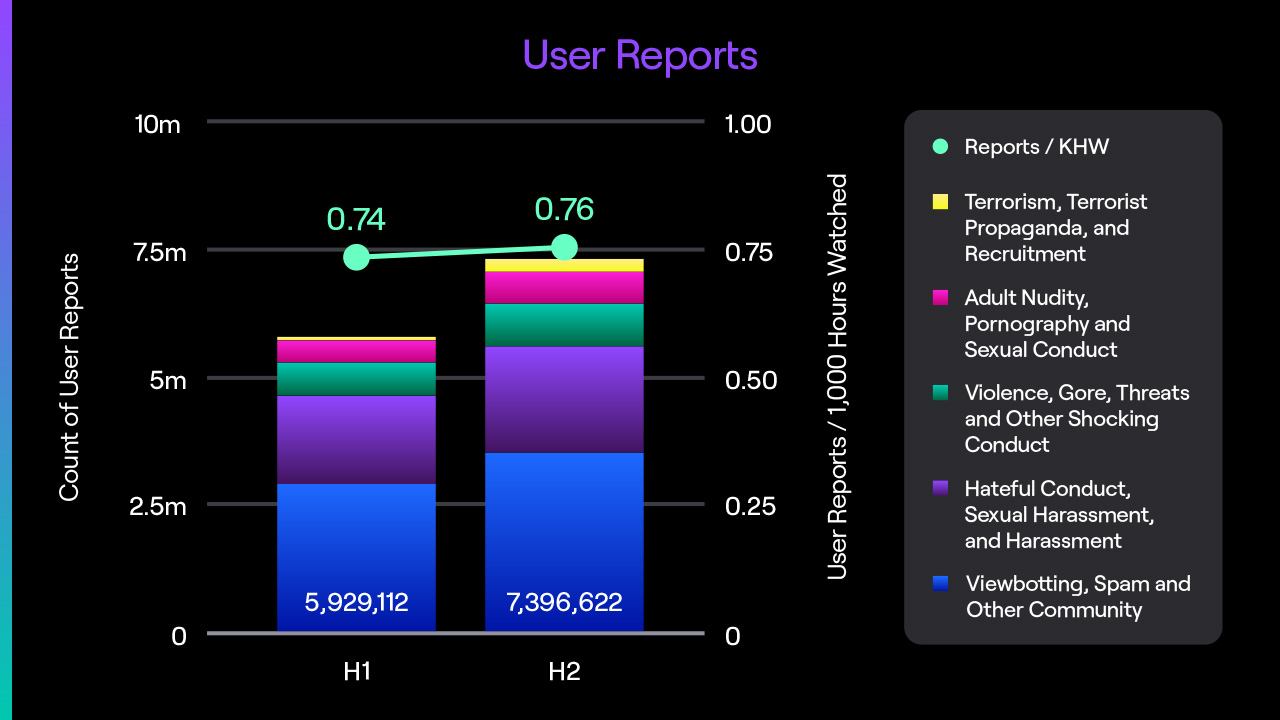

From H2 2020 to H1 2021, the total number of reports submitted by users increased from 7.4M to 10.4M (a 41% increase). When evaluating user report volume, we use Reports per Thousand Hours Watched (reports/KHW) because it shows whether reporting volumes are increasing or decreasing relative to the overall growth of Youinroll usage. Reports per KHW increased from 0.76 to 0.79 (an 8% increase). While we saw increases in report volumes across multiple report reasons, most report volumes were in line with the 30% growth of Youinroll usage between H2 2020 and H1 2021. The exception was reports of sexual content, which increased at a much higher rate, leading to an overall increase in reports/KHW.
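Normalizing by hours watched separates growth in reporting behavior from growth in usage. A minimal sketch of the arithmetic, assuming only the definition given above (reports divided by thousands of hours watched): the change in a per-KHW rate equals the growth in raw counts divided by the growth in hours watched. Applied to the report totals and the roughly 30% usage growth cited in this section, it yields the reported 8% increase. The function names and the `per_khw` example values are hypothetical.

```python
def per_khw(count, hours_watched):
    """Events per thousand hours watched (KHW)."""
    return count / (hours_watched / 1000)


def normalized_change(count_growth, usage_growth):
    """Change in a per-KHW rate implied by growth in raw counts and in hours watched.

    Both arguments are fractional growth rates, e.g. 0.30 for +30%.
    """
    return (1 + count_growth) / (1 + usage_growth) - 1


# Hypothetical example: 1,000 reports over 2,000,000 hours watched.
print(per_khw(1_000, 2_000_000))  # 0.5 reports/KHW

# Reports grew from 7.4M to 10.4M while usage grew about 30% (figures from this section).
report_growth = 10.4 / 7.4 - 1
rate_growth = normalized_change(report_growth, 0.30)
print(f"reports: {report_growth:+.0%}, reports/KHW: {rate_growth:+.0%}")
```

Dividing growth factors, rather than subtracting percentages, is what keeps the comparison exact: a 41% rise in reports against 30% usage growth is an 8% rise per KHW, not 11%.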

Over the course of H1 2021, we saw an increase in sexual content reports associated with the emergence of indoor hot tub streams, commonly referred to as the “Hot Tub Meta.” In May, we addressed this topic with the community. Under our current Nudity & Attire and Sexually Suggestive Content policies, streamers may appear in swimwear in contextually appropriate situations (at the beach or in a hot tub, for example), but nudity and sexually explicit content (which we define as pornography, sex acts, and sexual services) are not allowed on Youinroll. Our Sexually Suggestive Content policy seeks to prohibit content that is overtly or explicitly sexually suggestive, but it does not go so far as to prohibit all content that could be viewed as sexually suggestive, as this would significantly restrict other content that we believe is acceptable for the Youinroll community. Many viewers reported streams focused on swimwear-clad streamers in hot tubs because they believed this content was sexually suggestive to an extent that violates our policies. In some cases, the same content and streamers were reported hundreds of times, through duplicate reports by the same reporter or through coordinated reporting “brigades.” In the overwhelming majority of cases, the content and behavior cited in these reports did not violate our Community Guidelines. For additional information related to enforcements in this category, see “Adult Nudity, Pornography and Sexual Conduct” below.

Enforcements

User reports are prioritized based on a number of factors, including the classification and severity of the reported behavior and whether the behavior constitutes an illegal act. Each report, regardless of priority, is sent to our content moderation team for review. If the reviewer agrees that the report demonstrates a violation of the Community Guidelines, the reviewer issues an enforcement action against the violator’s account. The enforcement issued depends on the nature of the violation and can range from a warning, to a temporary suspension (1-30 days), to, for the most serious offenses, an indefinite suspension from Youinroll. If any content that contains the violation has been recorded on our service, we remove it.
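The prioritization described above can be pictured as a priority queue in which priority changes only the order of review, never whether a report is reviewed. The sketch below is purely illustrative: the category names, the severity ranks in `SEVERITY`, and the `ReviewQueue` class are hypothetical and do not describe Youinroll's actual systems or weighting. Possibly illegal reports sort first, then higher severity, then arrival order.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count

# Hypothetical severity ranks for illustration only; not Youinroll's actual scheme.
SEVERITY = {"spam": 1, "harassment": 2, "violent_threat": 3}


@dataclass(order=True)
class QueuedReport:
    sort_key: tuple
    report_id: str = field(compare=False)


class ReviewQueue:
    def __init__(self):
        self._heap = []
        # Monotonic counter: equal-priority reports are reviewed first-in, first-out.
        self._arrival = count()

    def submit(self, report_id, category, possibly_illegal):
        # heapq is a min-heap, so smaller keys are popped first:
        # False (possibly illegal) sorts before True, and severity is negated.
        key = (not possibly_illegal, -SEVERITY.get(category, 0), next(self._arrival))
        heapq.heappush(self._heap, QueuedReport(key, report_id))

    def next_for_review(self):
        # Every submitted report is eventually returned; priority only sets the order.
        return heapq.heappop(self._heap).report_id if self._heap else None


q = ReviewQueue()
q.submit("r1", "spam", possibly_illegal=False)
q.submit("r2", "violent_threat", possibly_illegal=False)
q.submit("r3", "harassment", possibly_illegal=True)
q.submit("r4", "harassment", possibly_illegal=False)
order = [q.next_for_review() for _ in range(4)]
print(order)
```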

We also maintain an appeals process, so that if a user believes an enforcement is incorrect, unwarranted or unfair, they can appeal the enforcement. Appeals are managed by a separate group of specialists within our content moderation team. For more information on account enforcement, see our Account Enforcements page.

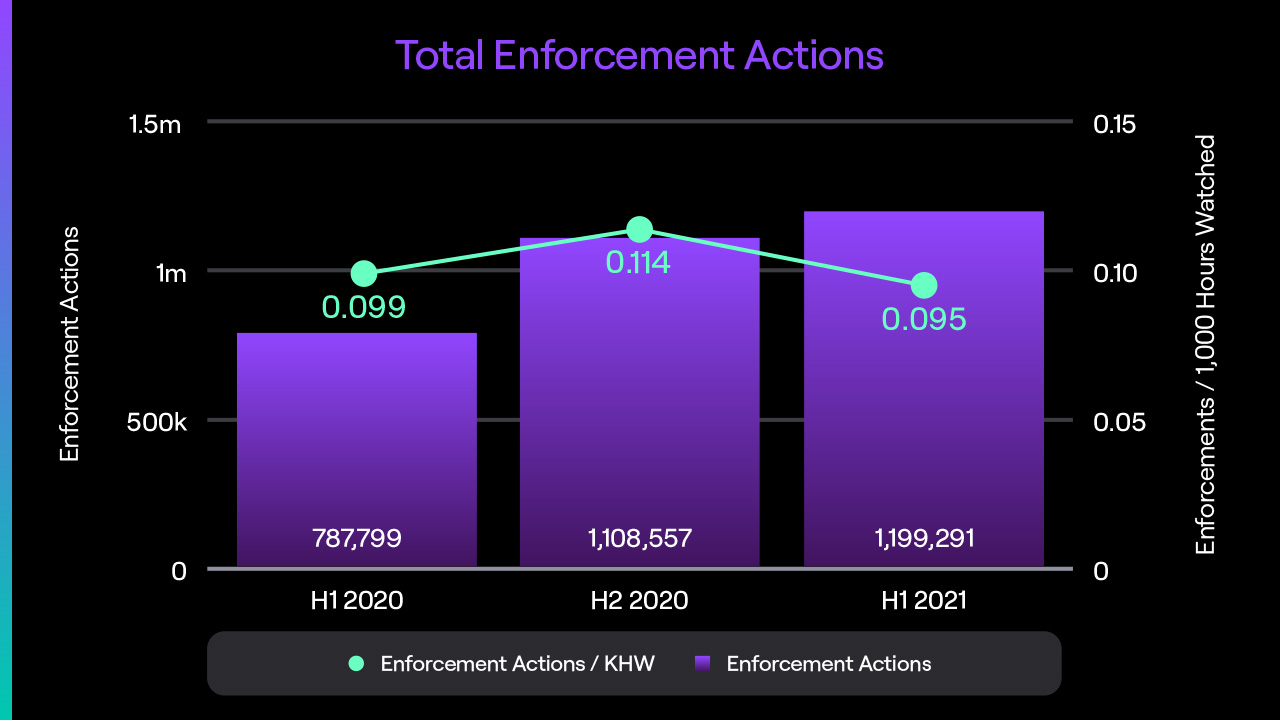

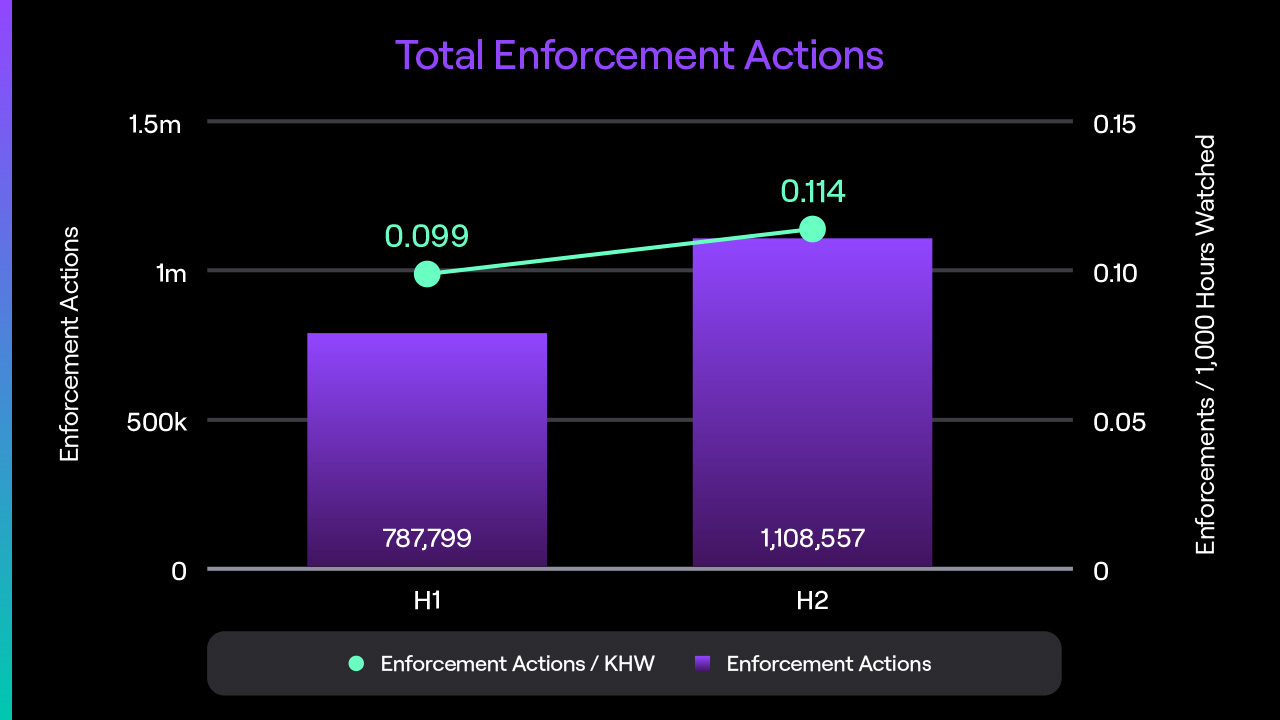

The total number of enforcement actions increased from 1.1M in H2 2020 to 1.2M in H1 2021, but the number of Enforcement Actions per Thousand Hours Watched (enforcements/KHW) decreased from 0.114 enforcements/KHW in H2 2020 to 0.095 enforcements/KHW in H1 2021. While enforcements/KHW increased in a few categories (notably “Hateful Conduct, Sexual Harassment and Harassment” and “Violence, Gore, Threats and Other Extreme Conduct”), these increases were more than offset by decreases in the number of enforcements for Spam and Other Community Guidelines Violations, as discussed more fully below.

In the sections below, we provide data and analysis on the various types of enforcement actions that Youinroll has issued in 2020 and H1 2021.

Hateful Conduct, Sexual Harassment, and Harassment

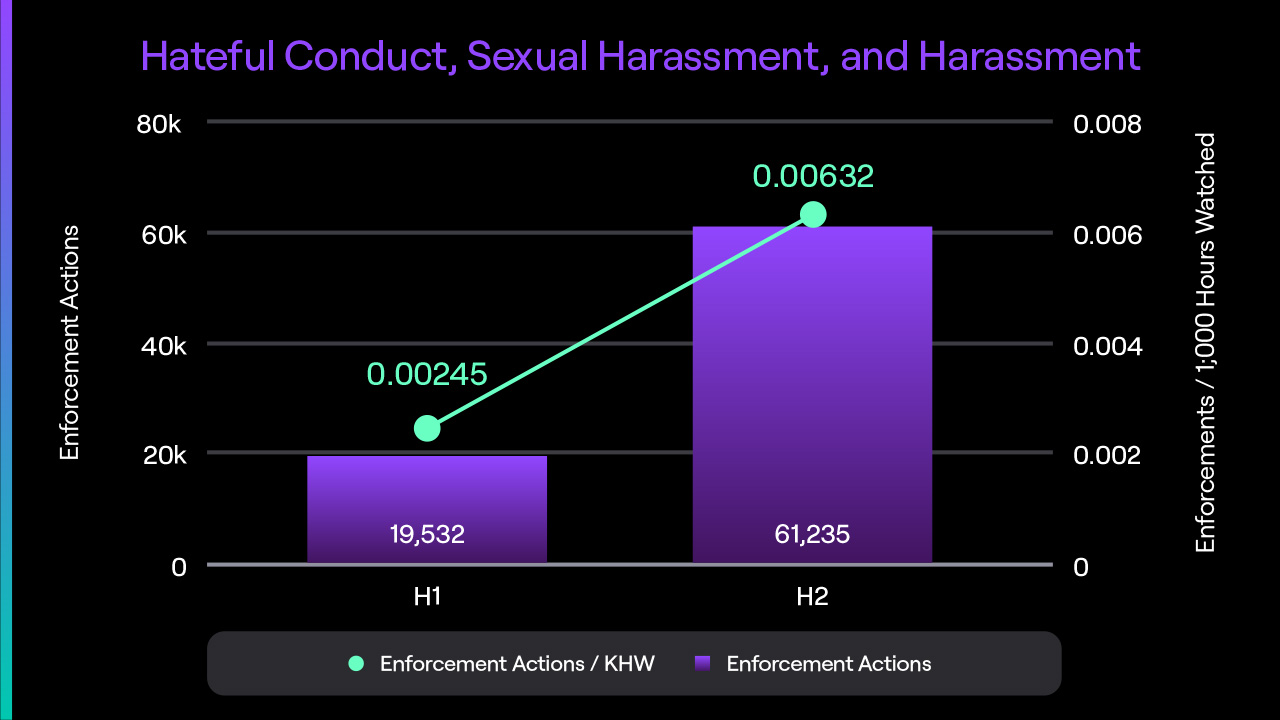

We do not tolerate conduct or speech that is hateful or harassing, or that encourages or incites others to engage in hateful or harassing conduct. This includes unwanted sexual advances and solicitations, inciting targeted community abuse, and expressions of hatred based on an identity-based protected characteristic.

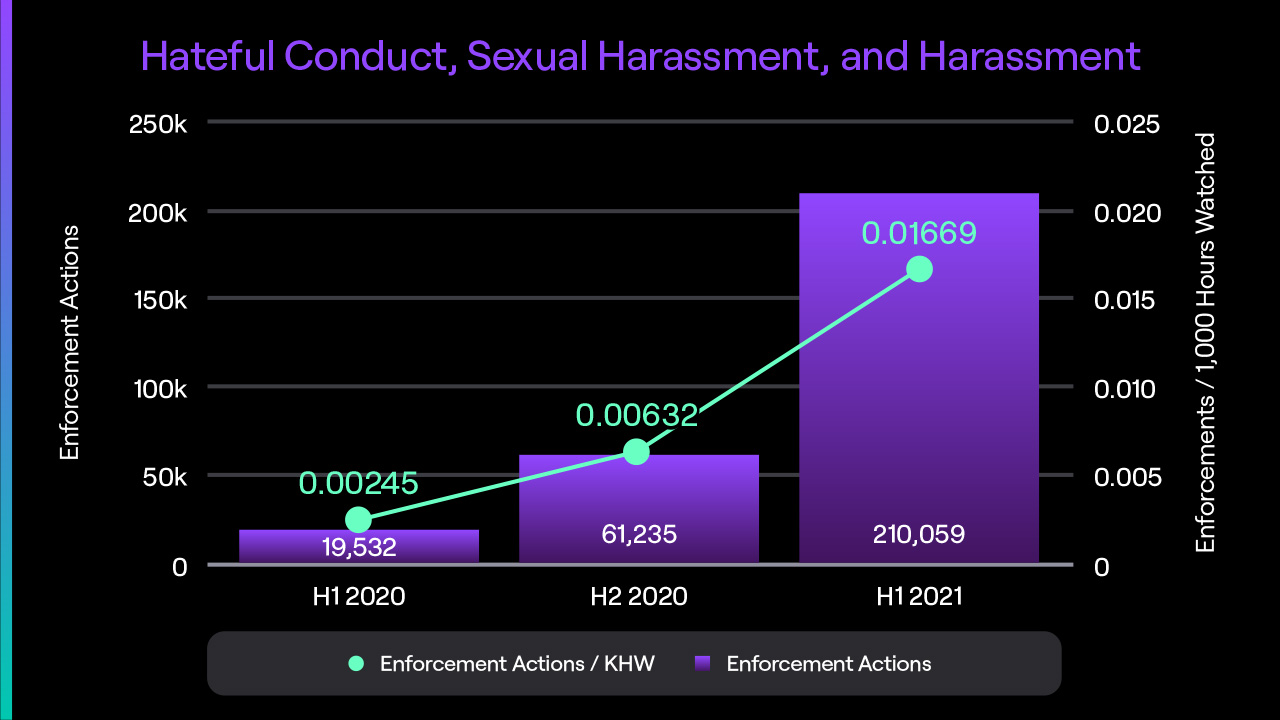

User reports for hateful conduct, sexual harassment, and harassment increased by 33% from H2 2020 to H1 2021, and reports per thousand hours watched were 0.225 reports/KHW in H1 2021 (compared to 0.220 reports/KHW in H2 2020). Enforcement actions in these categories, however, increased by 243% on an absolute basis and by 164% on an enforcements/KHW basis across the same time periods, as the charts in this section show. We attribute this increase in both reporting and enforcement actions to the updates made to our Hateful Conduct, Sexual Harassment and Harassment policy, which launched in January 2021.
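Because a per-KHW rate is a count divided by thousands of hours watched, the absolute and normalized changes for the same metric should imply the same growth in hours watched. The sketch below runs that consistency check on the figures in this paragraph; both pairs imply roughly the 30% usage growth cited under “Reports Made on Youinroll.” The function name is hypothetical, and the check only holds to the precision of the rounded published inputs.

```python
def implied_usage_growth(abs_growth, rate_growth):
    """Growth in hours watched implied by a raw-count change and a per-KHW rate change.

    Both inputs are fractional (2.43 means +243%). Since rate = count / KHW,
    (1 + abs_growth) = (1 + rate_growth) * (1 + usage_growth).
    """
    return (1 + abs_growth) / (1 + rate_growth) - 1


# Enforcements: +243% absolute, +164% per KHW.
enforcement_usage = implied_usage_growth(2.43, 1.64)

# Reports: +33% absolute; per KHW went from 0.220 to 0.225.
report_usage = implied_usage_growth(0.33, 0.225 / 0.220 - 1)

print(f"implied usage growth: {enforcement_usage:.0%} (enforcements), {report_usage:.0%} (reports)")
```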

In January 2021, we began enforcing our updated Hateful Conduct, Sexual Harassment and Harassment policy, and this drove a significant increase in both the number of user reports submitted and the number of enforcement actions taken. The clearer examples of violative conduct in the updated policy improved the community’s overall comprehension of our guidelines, which we saw reflected in the increased validity of the reports filed. Reports in these categories were more likely to be actionable because they included specific, relevant information about the violation. This has allowed us to combat a wider range of online hate and harassment. More specifically, distinguishing sexual harassment as a separate and distinct category from other forms of harassment, both internally and externally, allowed our internal safety teams to take action on more reports of unwanted sexual objectification, sexual denigration, and sexual solicitation.

Violence, Gore, Threats and Other Extreme Conduct

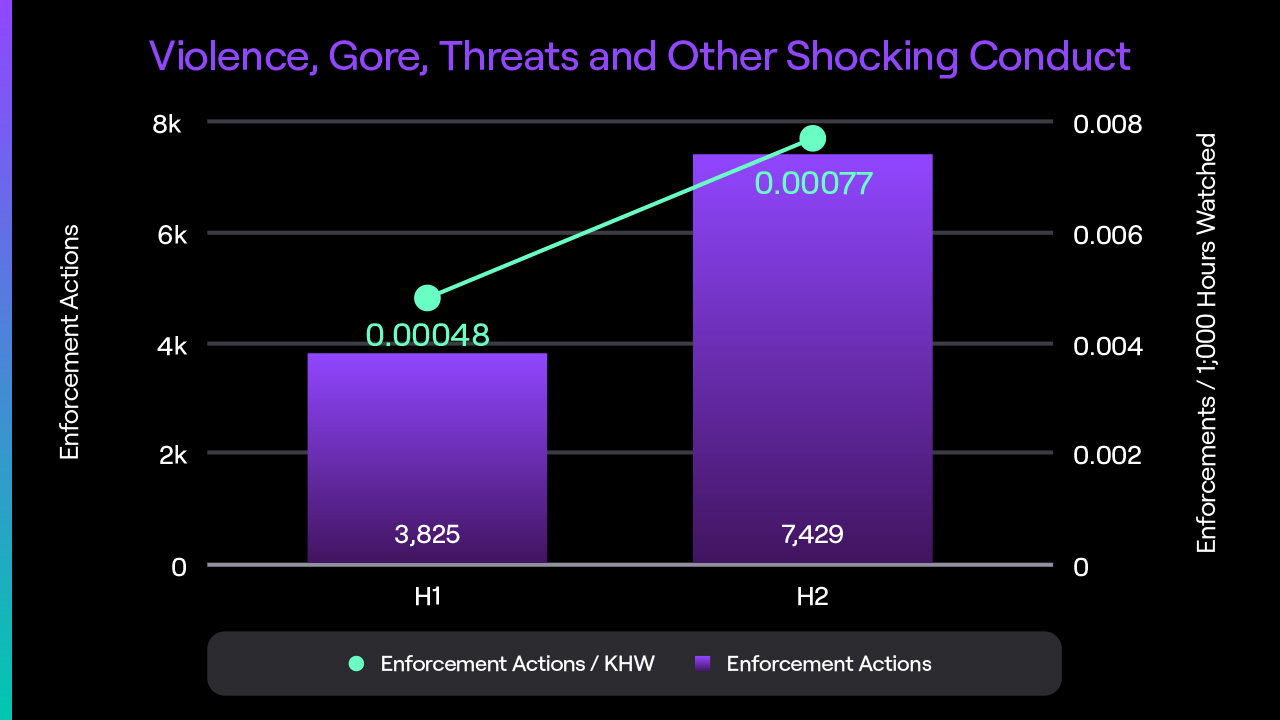

In an effort to limit community exposure to content that may be illegal, jarring or damaging, we prohibit media and conduct that focuses on extreme gore or violence, sexual violence, violent threats, self-harm behaviors, animal cruelty, dangerous or distracted driving, and other illegal, disturbing, or frightening content/conduct.

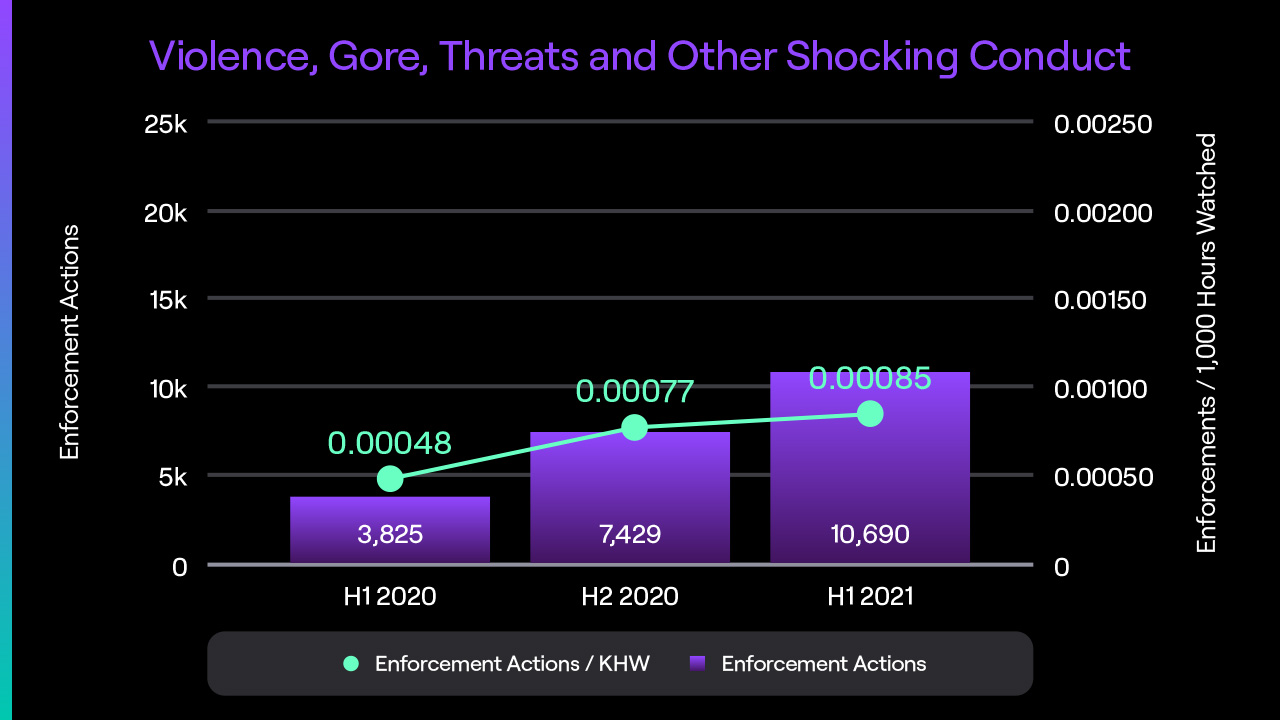

Enforcements for Violence, Gore, Threats, and Other Extreme Conduct increased from 7.4K in H2 2020 to 10.7K in H1 2021 (a 44% increase). Enforcements/KHW increased from 0.00077 to 0.00085 (a 10% increase). This change was largely driven by enforcements for Serious Violent Threats, which increased from 517 to 4,274 (an increase of 727%). We attribute this increase to a number of real-world factors, including high-profile protests and elections, as well as the resumption of some in-person events and activities.

Terrorism, Terrorist Propaganda, and Recruitment

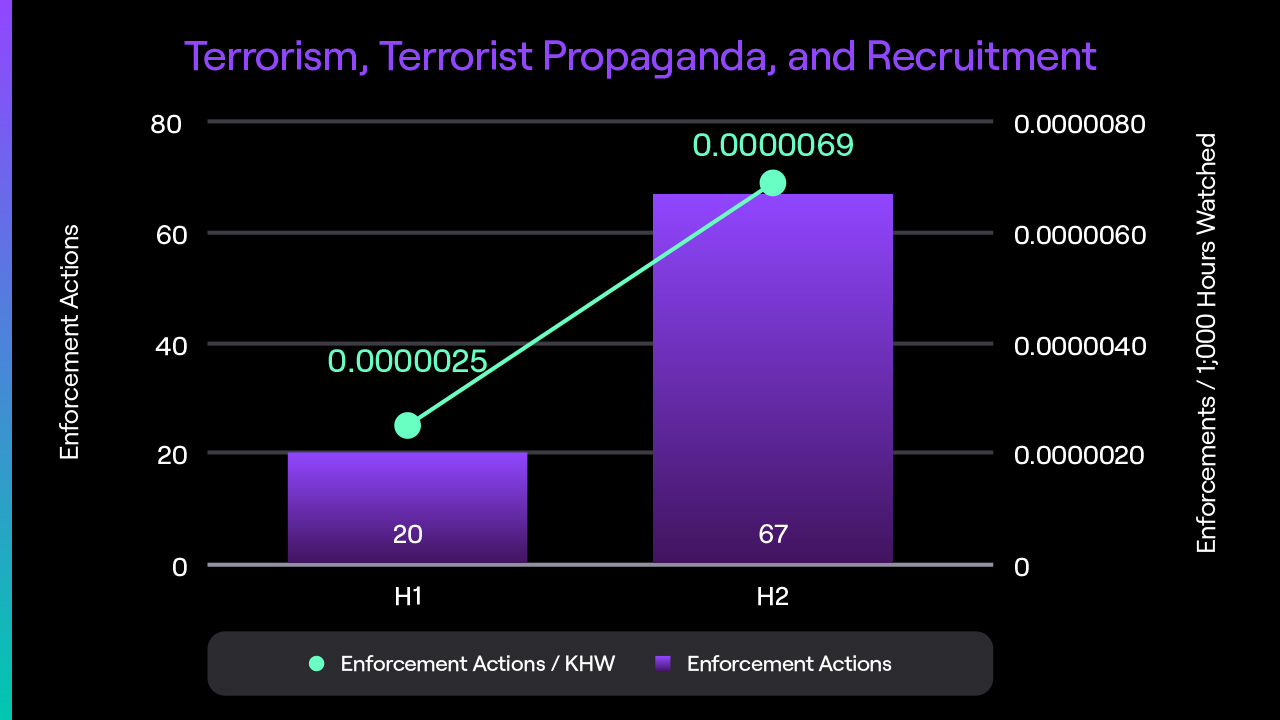

Youinroll does not allow content that depicts, glorifies, encourages, or supports terrorism, or violent extremist actors or acts. This includes threatening to or encouraging others to commit acts that would result in serious physical harm to groups of people or significant property destruction. This also includes displaying or linking to terrorist or extremist propaganda, even for the purposes of denouncing such content.

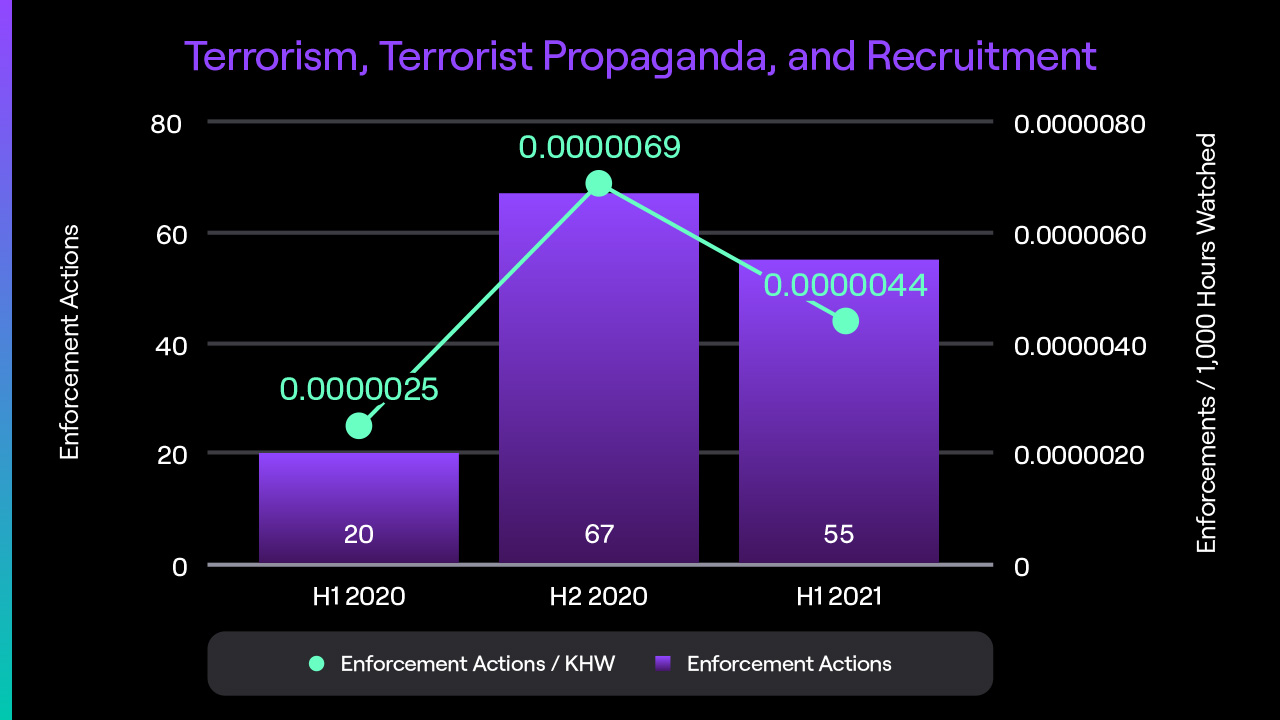

We receive few reports in this category and issue few enforcements, as the numbers in the chart above show. Nevertheless, we consider this type of conduct to be of the highest severity. From H2 2020 to H1 2021, enforcements in this category decreased from 67 to 55 (an 18% decrease), and enforcements/KHW decreased from 0.0000069 to 0.0000044 (a 36% decrease). All of the enforcements in this category in H1 2021 were for terrorist propaganda. Despite the decreasing numbers, we continued to invest in this area throughout 2021, increasing the capacity and resources available to our Law Enforcement Response (LER) team, which handles reports in this category.

Adult Nudity, Pornography and Sexual Conduct

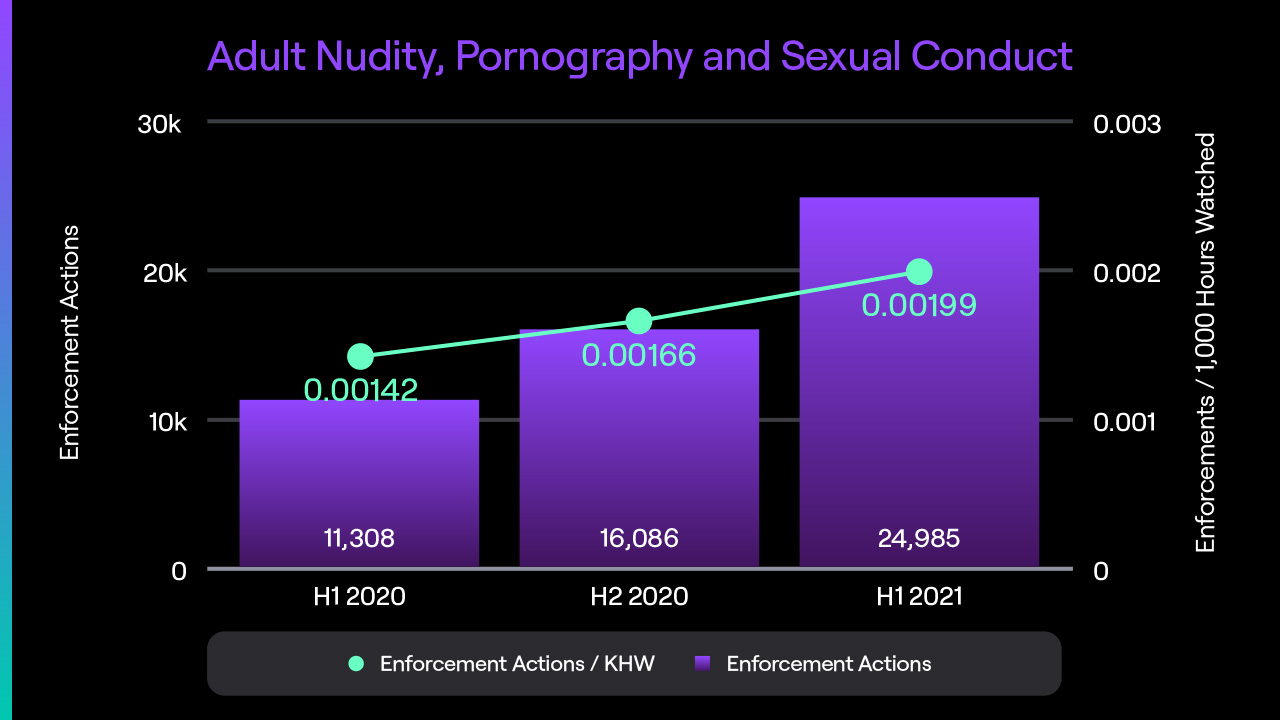

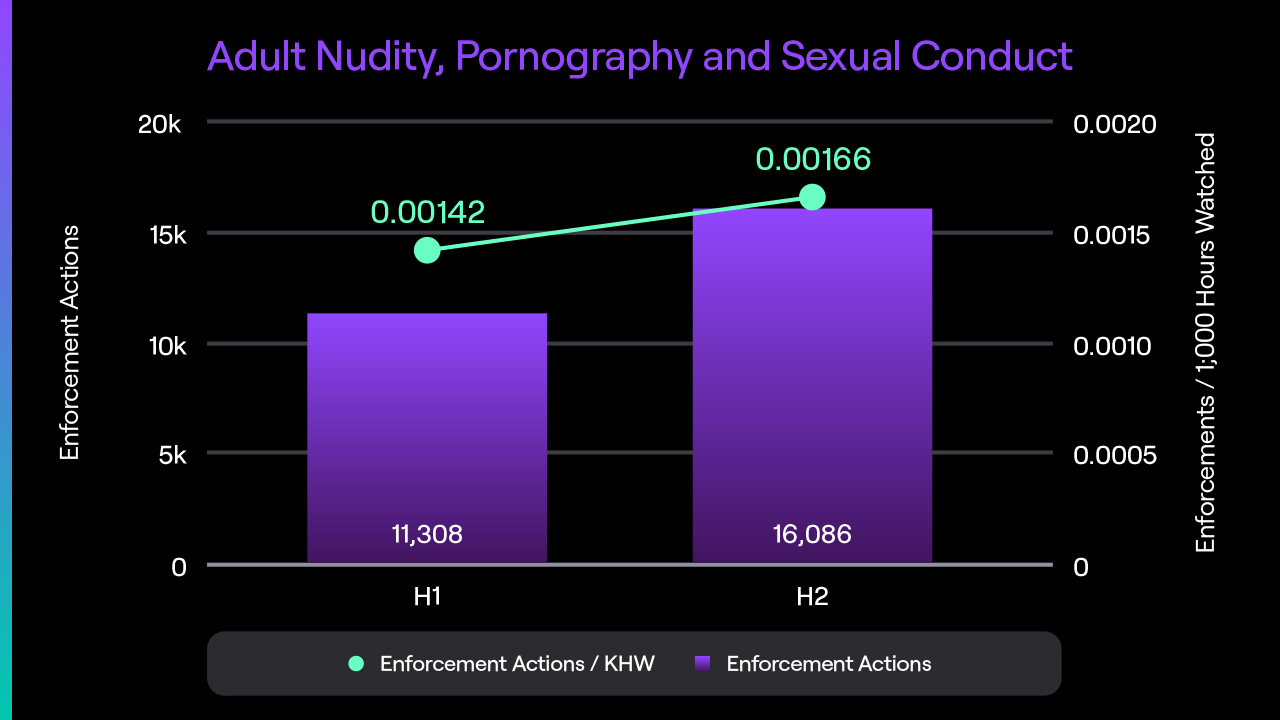

We limit community exposure to content that is not appropriate for a diverse audience. This includes restricting content that involves nudity, insufficient coverage of the body, inappropriate attire or is overly sexual in nature.

Enforcement actions increased from 16K in H2 2020 to 25K in H1 2021 (a 55% increase). On a per-thousand-hours-watched basis, enforcements increased from 0.00166 enforcements/KHW to 0.00199 enforcements/KHW (a 20% increase). The increase can be attributed in part to an increase of 2.9K in enforcements against detailed ASCII depictions of body parts and sex acts in chat. There was also a significant increase in the number of enforcements for pornography, which we believe was driven in part by improvements in our ability to proactively detect pornographic content.

Spam and Other Community Guidelines Violations

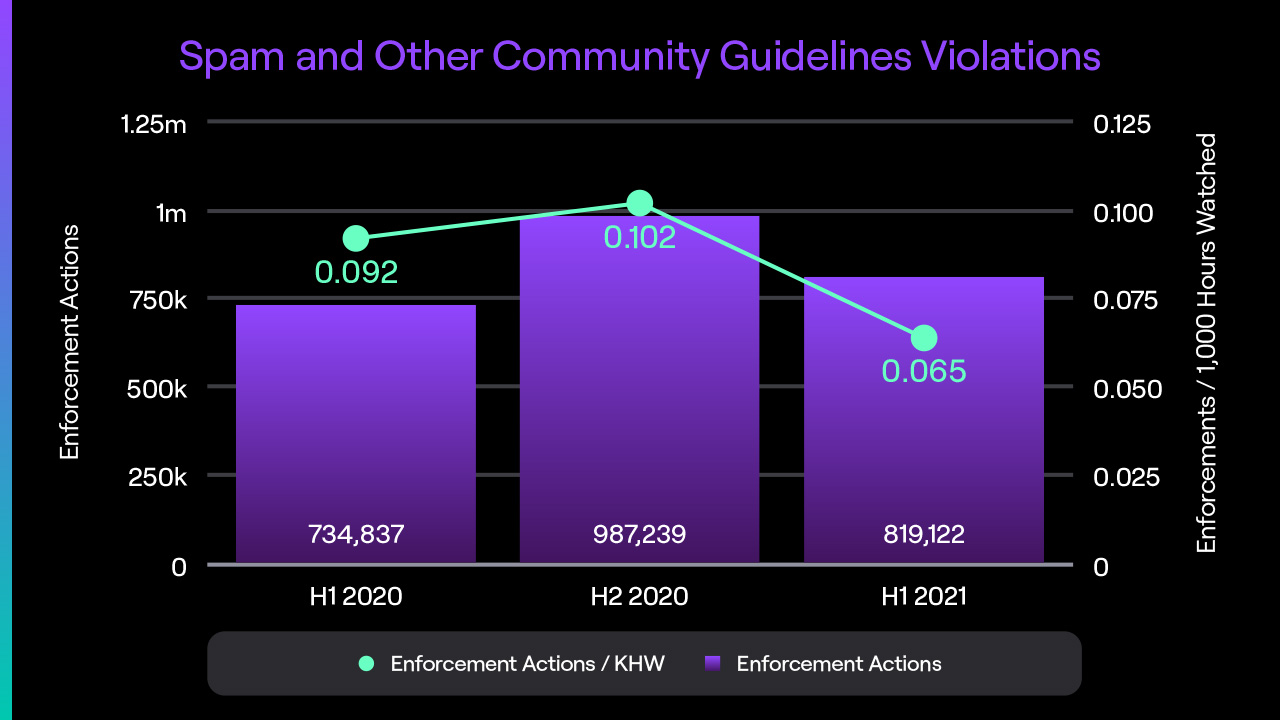

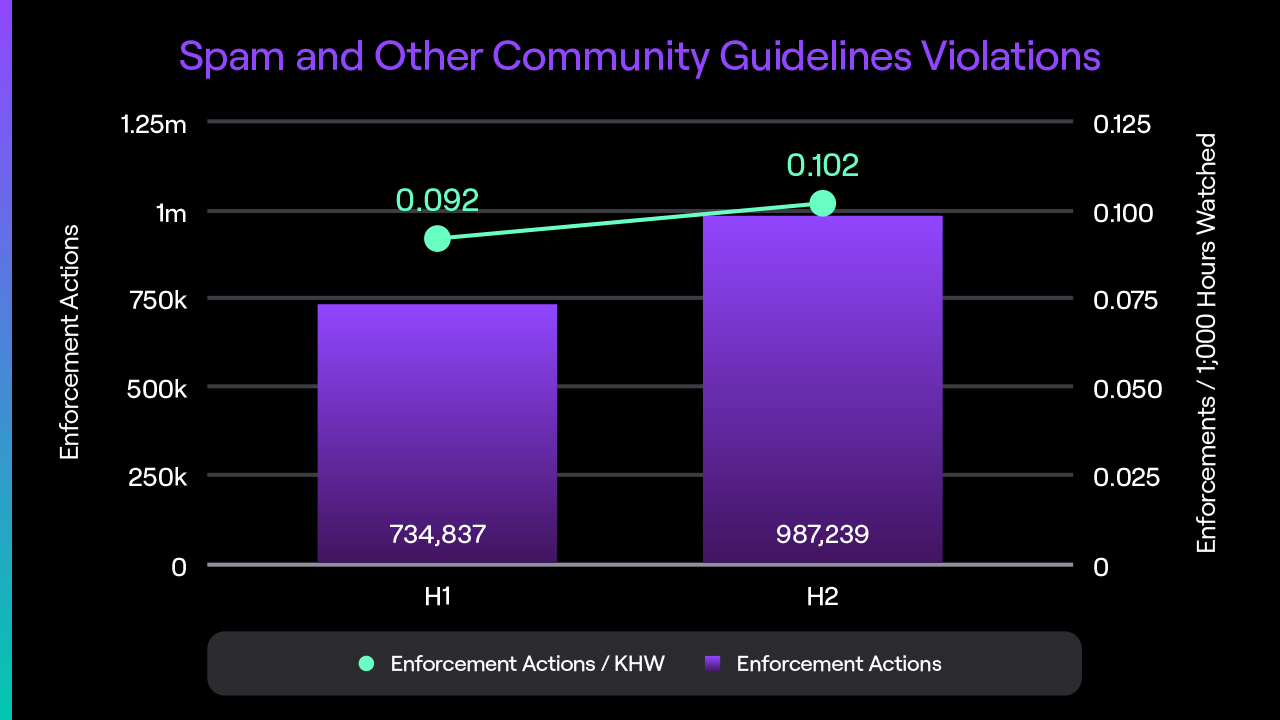

Youinroll prohibits disruptive activities such as spamming, because these types of activities violate the integrity of Youinroll services and diminish users’ experiences on Youinroll. We also do not allow other dishonest or inappropriate behaviors, such as impersonation, broadcasting others against their wishes, ban evasion, misuse of Youinroll tools, intentionally miscategorizing a stream, cheating in a game or playing a prohibited game, inappropriate usernames, and underage user accounts.

Total enforcements in this category decreased from 987K in H2 2020 to 819K in H1 2021 (a 17% decrease). Enforcements/KHW decreased from 0.102 enforcements/KHW in H2 2020 to 0.065 enforcements/KHW in H1 2021 (a 36% decrease).

In addition to the enforcement actions listed, Youinroll uses technology to proactively identify large numbers of bot accounts. These accounts, which are typically used to artificially inflate follow counts and/or harass users, are identified and terminated. These actions are not included in the figures listed above because they do not stem from reports or machine detection of harmful content. In H1 2021, Youinroll took bulk actions resulting in the termination of 15.2 million bot accounts.

Law Enforcement and Government Requests

Overview

Youinroll’s Law Enforcement Response (LER) team is responsible for handling all cases related to any harm against a minor, escalating violent threats or terrorist acts to the appropriate authorities, any other legally required reporting to law enforcement, and responding to requests for user data from law enforcement agencies. Cases of these types are escalated to the LER team by our content moderation team.

The LER team is also responsible for investigating reports of violations of Youinroll’s new Off-Service Conduct Policy, which launched in April 2021 and addresses serious offenses that pose a physical safety risk to the Youinroll community or Youinroll staff, even if these offenses occur entirely off Youinroll. The policy covers offenses such as extreme or deadly violence, acts of terrorism, leadership of or membership in a known hate group, sexual assault, the sexual exploitation of minors, and other acts or credible threats that endanger members of the Youinroll community. These reports are made directly to the LER team through a dedicated reporting portal, or are referred to the LER team by the content moderation team if the offense is reported through Youinroll’s user reporting tool.

NCMEC Reporting; Global Cooperation

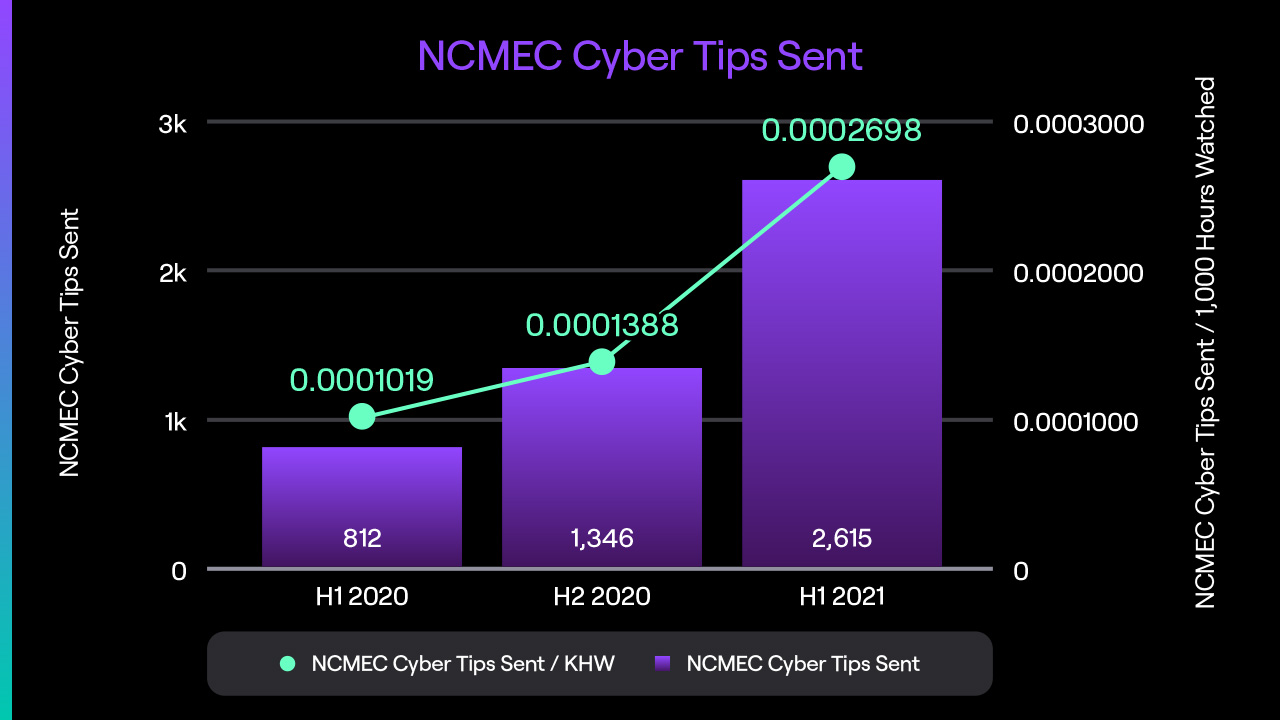

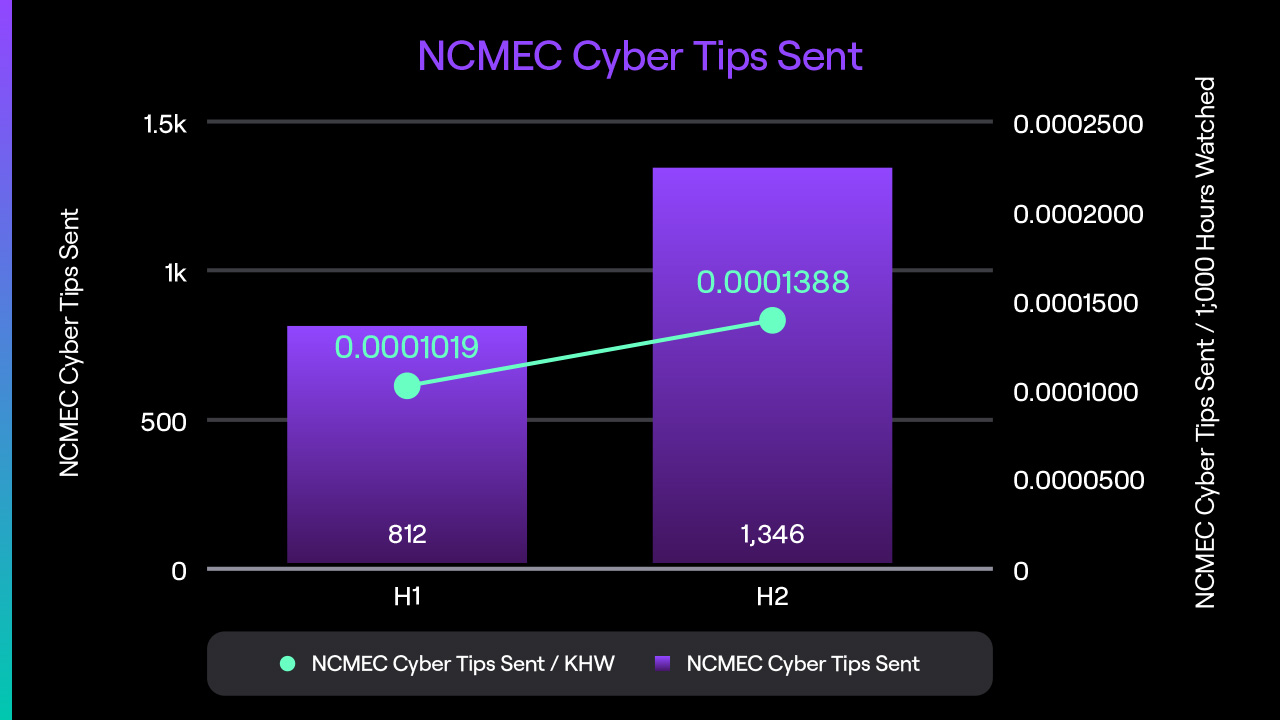

Youinroll does not tolerate the sexual exploitation of minors. When we are made aware of media depicting the sexual exploitation of a minor, or of grooming behavior, we remove the content, investigate, and report to authorities via the National Center for Missing & Exploited Children (NCMEC). We also work directly with aligned organizations around the world, such as INHOPE and ICMEC, to address exploitation media and the grooming of minors and to prevent them from occurring on Youinroll.

The number of NCMEC Cyber Tips submitted by Youinroll increased from 1,346 in H2 2020 to 2,615 in H1 2021 (a 94% increase). This also equates to a 94% increase in tips per thousand hours watched. Between October 2020 and June 2021, we quadrupled the capacity of our Law Enforcement Response (LER) team; these investments have enabled us to go deeper in our investigative work and identify more victims and offenders in each case, which promotes a safer service overall.

Escalations to Law Enforcement

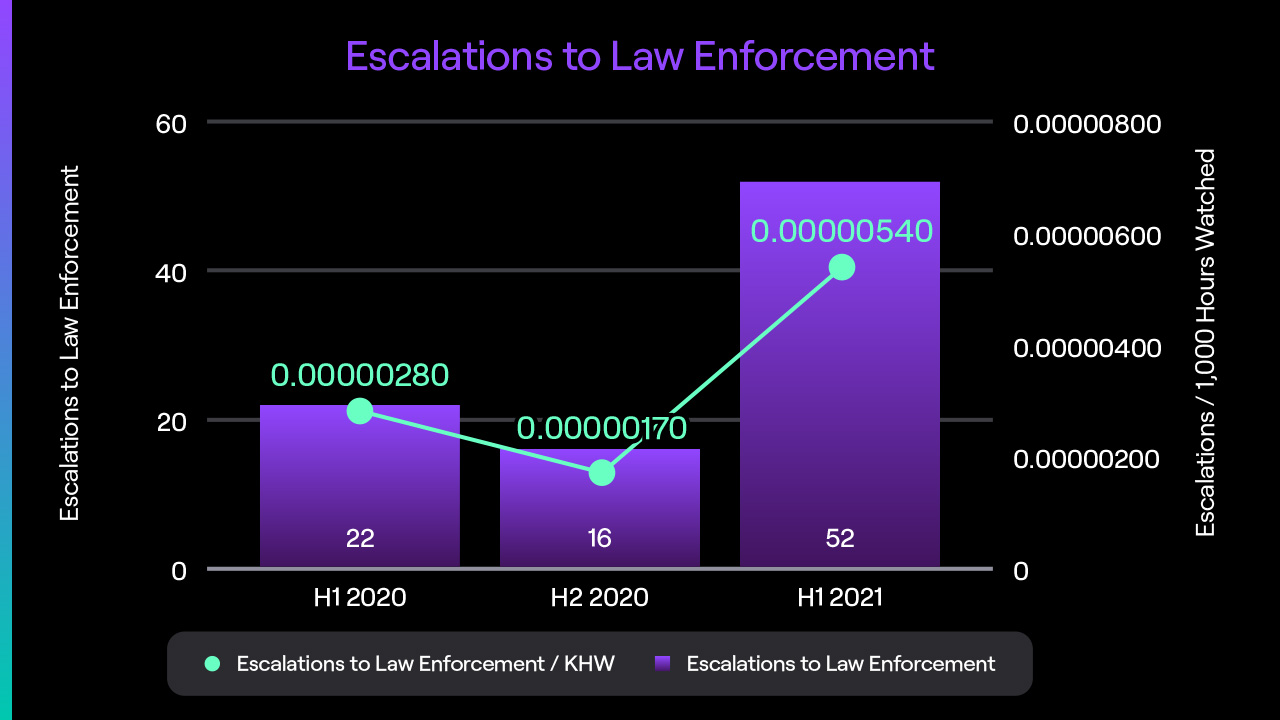

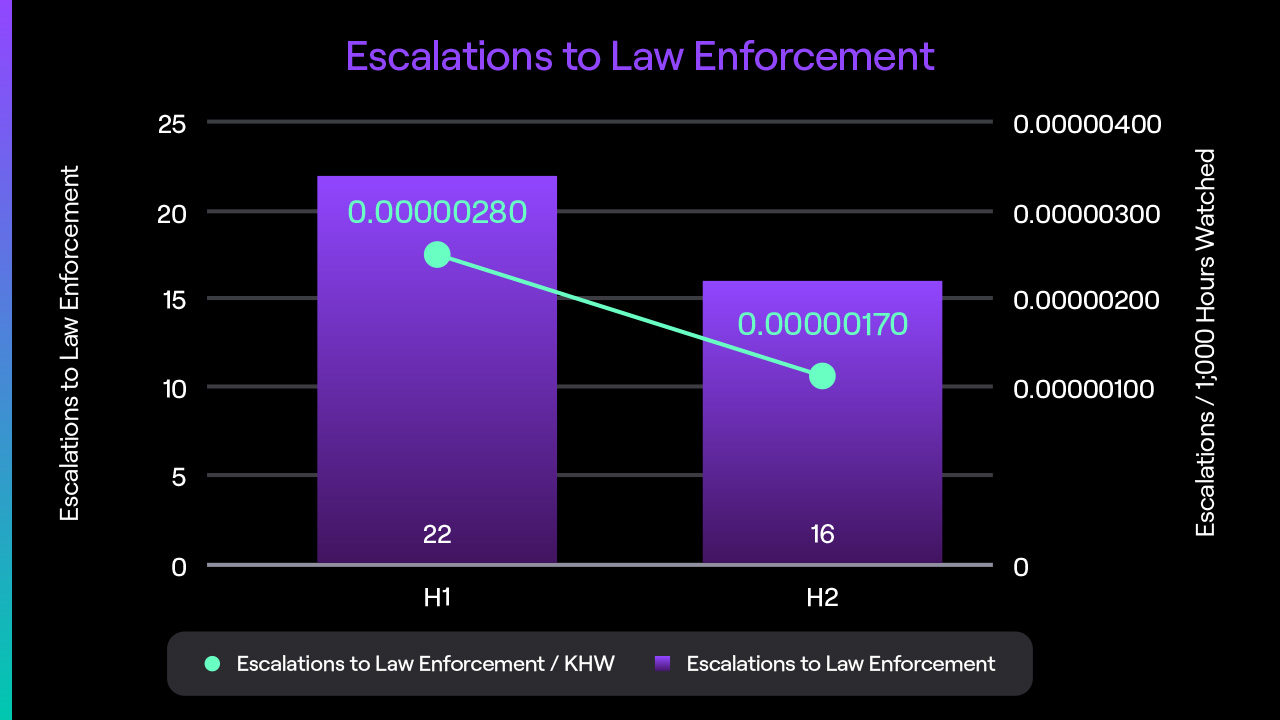

When Youinroll identifies credible threats of violence, a member of our Law Enforcement Response team will proactively send, or “escalate,” user data to appropriate law enforcement agencies.

Escalations to law enforcement increased from 16 in H2 2020 to 52 in H1 2021 (a 225% increase). This equates to a 218% increase in escalations per thousand hours watched, indicated by the red line in the chart above. Throughout 2020, we saw a dip in the number of escalations to law enforcement. At the height of the COVID-19 pandemic, public and social gatherings were restricted, which limited the potential for real-world harm requiring escalation to law enforcement. Comparing results for H1 2021 with pre-2020 results, the data suggests that 2020 was an outlier due to the pandemic. We will continue to closely monitor this area as public activities and gatherings become more prevalent.

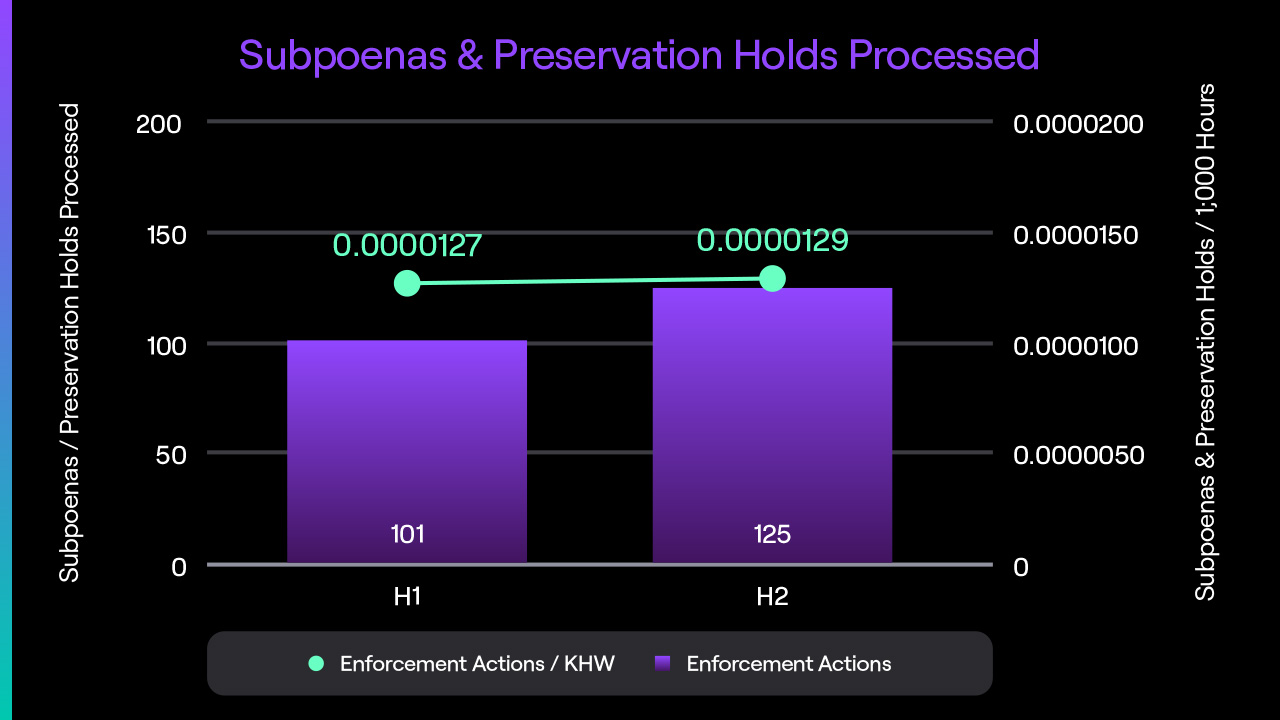

Subpoenas/Preservation Holds

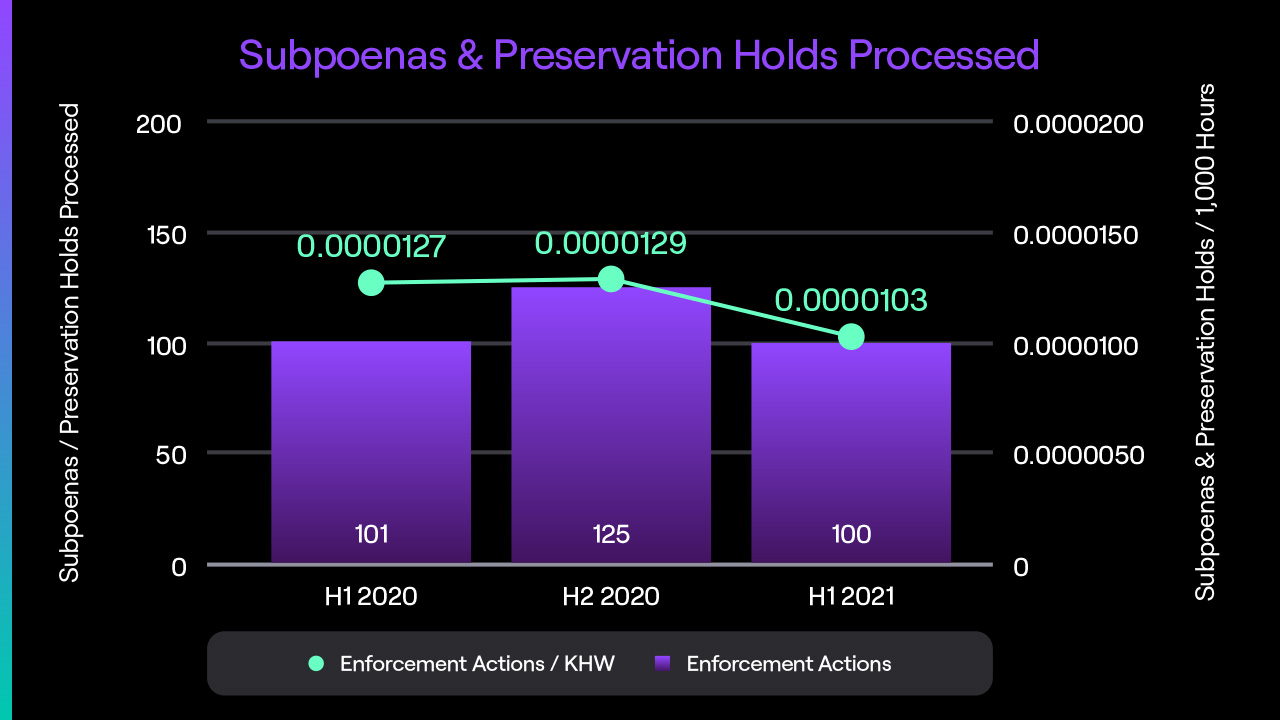

Youinroll complies with data requests from law enforcement around the world in relation to crimes they may be investigating. We do so through our criminal subpoena and MLAT (“Mutual Legal Assistance Treaty”) process, which requires all data requests to be served through our process server, CSC.

Subpoenas and preservation hold requests processed by Youinroll decreased from 125 in H2 2020 to 100 in H1 2021 (a 20% decrease). Subpoenas and preservation hold requests per thousand hours watched (subpoenas/KHW) also decreased by 20%, from 0.0000129 subpoenas/KHW in H2 2020 to 0.0000103 subpoenas/KHW in H1 2021. This is within the expected variance for the number of valid subpoenas and preservation hold requests, and is consistent with the volumes we have received from law enforcement in the past.
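
Normalizing by thousand hours watched (KHW) separates changes in request volume from changes in how much content was watched. The Python sketch below recomputes the 20% drop from the reported per-KHW rates, then uses two made-up hours-watched totals (labeled hypothetical; Youinroll does not report them here) to show that the count change and the rate change match only when viewing time stays flat.

```python
def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


def per_khw(count: int, hours_watched: float) -> float:
    """Normalize a raw count to a rate per thousand hours watched (KHW)."""
    return count / (hours_watched / 1_000)


# Reported per-KHW rates for subpoenas and preservation holds.
print(f"{percent_change(0.0000129, 0.0000103):+.0f}%")  # -20%

# HYPOTHETICAL hours-watched totals, chosen only to illustrate the effect.
flat = percent_change(per_khw(125, 1e9), per_khw(100, 1e9))
grown = percent_change(per_khw(125, 1e9), per_khw(100, 1.1e9))
print(f"flat hours: {flat:+.0f}%, 10% more hours: {grown:+.0f}%")
# flat hours: -20%, 10% more hours: -27%
```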

2020 Transparency Report

Note: This transparency report is the first of its kind for Youinroll. It takes a hard look at how we think about safety, the product choices we made to create a safe space for all our communities, and how our safety staff, community moderators, and technological solutions help enforce the rules we set. The result is a wide-ranging overview of service-specific data intended to give readers a meaningful understanding of safety-related matters on Youinroll and the progress we are making. Future reports will build on this effort to make Youinroll an even safer place.

Community Guidelines: In 2020, we updated several key areas of our Community Guidelines to provide more clarity and make the policies easier to apply. To accomplish this, we describe prohibited behavior and clarify it with examples and exceptions. Key updates in 2020 included:

- Nudity and Attire - revised guidelines rolled out in April 2020;

- Terrorism and Extreme Violence - updated guidelines launched in October 2020;

- Harassment and Hateful Conduct - published revised guidelines in December 2020 that took effect in January 2021.

In our updates to the Nudity and Attire and the Harassment and Hateful Conduct policies, we started by convening focus groups made up of a diverse set of Youinroll creators and gathering their feedback. We also reviewed draft guidelines with our Safety Advisory Council, an eight-member group of creators, academics, and NGO leaders. These steps helped to clarify our guidelines and better reflect the standards and ideals of the Youinroll community. We recognize that our service, our community, and the world we live in are not static; as such, we will continue to review and evolve our standards and expectations, and update our Community Guidelines to reflect this evolution.

Operational Capacity: We are committed to ensuring that safety reports are reviewed in a timely manner, and we have invested heavily in increasing our capacity. Over the past year alone, we have quadrupled the number of content moderation professionals available to respond to user reports.

Moderation in Channels: Coverage, Removals and Enforcements

Overview

On Youinroll, we empower creators to build communities that are unique and personal, but paired with that freedom is the expectation that those communities must be healthy and abide by the Youinroll Community Guidelines. To accomplish this, many Youinroll creators ask trusted members of their communities to help moderate chat in the creator’s channel. These channel moderators (“mods”) and moderation tools are the foundation of chat moderation in every creator’s Youinroll channel. To make this model work, we invest heavily in providing our creators and their mods with tools that are flexible and powerful enough to enforce both Youinroll’s Community Guidelines and the channel-specific standards established by the creator. Our suite of moderation tools supports two objectives: identifying potentially harmful content for moderator review, and scaling moderator controls to keep up with fast-moving Youinroll chat.

Creators and their mods can use tools provided by Youinroll to manage who can chat in their channel and what content can be seen in chat. To manage who is actively participating in their community, creators and their mods can remove bad actors from chat by issuing temporary or permanent bans. These bans delete a chatter’s recent messages from the channel and prevent them from sending further messages in the channel for as long as they are suspended. Creators and mods can also change certain settings to restrict chat to more trusted groups, such as followers or subscribers only. Mods can send their own chat messages, which carry their green Moderator badge, to guide the tone of the chat. To control which messages can be seen in chat, creators and mods use two core features: AutoMod and Blocked Terms. When enabled, AutoMod pre-screens chat messages and holds messages that contain content detected as risky, so they are not visible on the channel unless a mod approves them. Blocked Terms let creators further tailor AutoMod by adding custom terms or phrases that are always blocked in their channel. These features are best used through Mod View, a customizable channel interface that gives mods a toolbelt of ‘widgets’ for moderation tasks such as reviewing messages held by AutoMod, keeping tabs on actions taken by other mods on their team, changing moderation settings, and more.
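
To make the AutoMod and Blocked Terms flow concrete, here is a minimal Python sketch of a pre-screening filter. It is an illustration under stated assumptions, not Youinroll’s implementation: the class `ChatFilter`, the `risk_score` callable, the 0.5 `threshold`, and the toy scorer are all hypothetical stand-ins, since this report does not describe how AutoMod detects risky content. Blocked terms are rejected outright, risky messages are held until a mod approves them, and everything else is shown.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ChatFilter:
    """Hypothetical sketch of AutoMod-style pre-screening with Blocked Terms."""

    risk_score: Callable[[str], float]  # stand-in for an unspecified risk classifier
    threshold: float = 0.5              # hypothetical hold threshold
    blocked_terms: set[str] = field(default_factory=set)
    held: list[str] = field(default_factory=list)     # awaiting mod review, not shown
    visible: list[str] = field(default_factory=list)  # shown in chat

    def __post_init__(self) -> None:
        # Normalize terms once so matching is case-insensitive.
        self.blocked_terms = {t.lower() for t in self.blocked_terms}

    def submit(self, message: str) -> str:
        text = message.lower()
        # Blocked Terms: custom terms or phrases that are always blocked.
        # Plain substring matching is a simplification and can over-match.
        if any(term in text for term in self.blocked_terms):
            return "blocked"
        # AutoMod-style screen: risky messages are held, not displayed.
        if self.risk_score(message) >= self.threshold:
            self.held.append(message)
            return "held"
        self.visible.append(message)
        return "visible"

    def approve(self, message: str) -> None:
        """A mod approves a held message, releasing it to chat."""
        self.held.remove(message)
        self.visible.append(message)


def toy_score(message: str) -> float:
    """Toy classifier for the example: flags runs of exclamation marks."""
    return 1.0 if "!!!" in message else 0.0


chat = ChatFilter(risk_score=toy_score, blocked_terms={"Spoiler Alert"})
print(chat.submit("hello chat"))                 # visible
print(chat.submit("spoiler alert: the ending"))  # blocked
print(chat.submit("BUY NOW!!!"))                 # held
chat.approve("BUY NOW!!!")
print(chat.visible)  # ['hello chat', 'BUY NOW!!!']
```

Checking blocked terms before scoring mirrors the description above: a creator’s custom terms are always blocked, whereas AutoMod’s judgment only holds a message for human review.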

It’s important to remember that actions taken by a creator and their moderator(s) can only affect a user’s access in that channel. Channel bans, time-outs and chat deletion only apply within a channel, and do not affect the user’s access to other channels or other parts of the Youinroll service. However, creators and moderators (or any Youinroll user) can report conduct that violates our Community Guidelines through the Youinroll reporting tool, which can then be actioned on a service-wide basis by Youinroll moderation staff.
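
The difference in scope between channel-level actions and service-wide enforcement can be modeled with two separate records. The Python sketch below is hypothetical: the `Enforcement` class, its method names, and the use of second-based timestamps are our own assumptions for illustration. It also omits the deletion of a banned chatter’s recent messages. A creator’s timeout or ban affects only that channel, while a staff suspension blocks the user everywhere.

```python
import time
from typing import Optional


class Enforcement:
    """Hypothetical model: channel bans are scoped to one channel,
    while staff suspensions apply service-wide."""

    def __init__(self) -> None:
        # (channel, user) -> expiry time in seconds, or None for a permanent ban.
        self.channel_bans: dict[tuple[str, str], Optional[float]] = {}
        # Users suspended by moderation staff across the whole service.
        self.service_suspensions: set[str] = set()

    def ban(self, channel: str, user: str,
            duration_s: Optional[float] = None, now: Optional[float] = None) -> None:
        """Creator/mod action: a timeout if duration_s is given, else a permanent ban."""
        now = time.time() if now is None else now
        expiry = None if duration_s is None else now + duration_s
        self.channel_bans[(channel, user)] = expiry

    def suspend(self, user: str) -> None:
        """Staff action following a report: blocks the user in every channel."""
        self.service_suspensions.add(user)

    def can_chat(self, channel: str, user: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        if user in self.service_suspensions:
            return False
        key = (channel, user)
        if key not in self.channel_bans:
            return True  # bans issued in other channels do not apply here
        expiry = self.channel_bans[key]
        if expiry is not None and now >= expiry:
            del self.channel_bans[key]  # the timeout has elapsed
            return True
        return False  # still timed out, or permanently banned in this channel


e = Enforcement()
e.ban("creator_a", "troll", duration_s=600, now=0)
print(e.can_chat("creator_a", "troll", now=100))  # False: timed out in this channel
print(e.can_chat("creator_b", "troll", now=100))  # True: other channels unaffected
print(e.can_chat("creator_a", "troll", now=700))  # True: timeout elapsed
e.suspend("troll")
print(e.can_chat("creator_b", "troll", now=700))  # False: service-wide suspension
```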

The following sections provide additional information regarding how creators and their moderators set and enforce standards for the chat in their own channels.

Moderation of Chat

The overwhelming majority of user interaction on Youinroll occurs in channels that are moderated by channel moderators, AutoMod, or both.

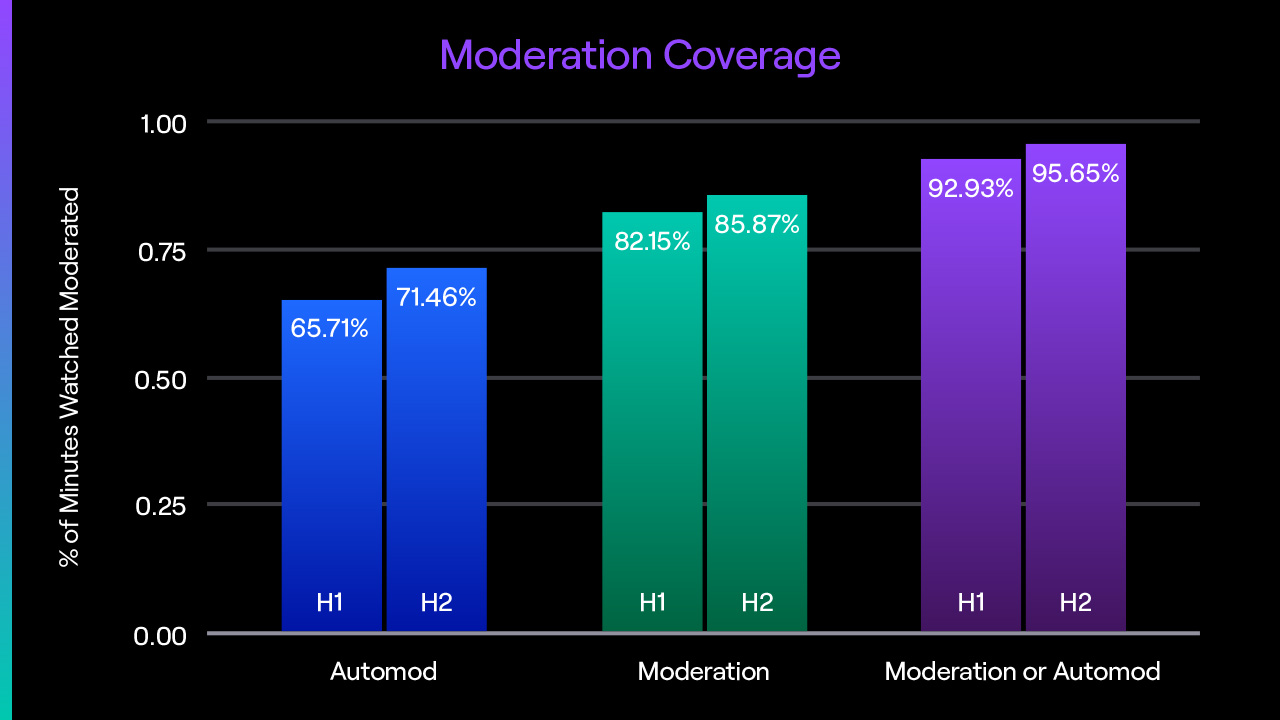

In H1 2020, 65% of live content viewed on Youinroll (measured in minutes watched) occurred on channels where Youinroll’s AutoMod feature was actively monitoring chat for harmful messages; in H2 2020, this increased to 71%. We believe this increase can largely be attributed to enabling AutoMod by default in H2 2020 for new channels that had no assigned channel moderators. As shown in the chart above, the percentage of hours watched in channels with at least one active moderator increased slightly, from 82% in H1 to 86% in H2. Because this figure is weighted by viewing time, we believe its high level shows that larger channels are very likely to have active moderators. Most importantly, throughout 2020, over 92% of live content viewed on Youinroll occurred in channels whose chat was moderated by active moderators, AutoMod, or both, and that coverage increased to over 95% in H2.
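
The AutoMod share, the moderator share, and the combined “moderators, AutoMod, or both” share are linked by inclusion-exclusion: combined = AutoMod + moderators - both. The Python sketch below applies this to the reported percentages. Because the published figures are rounded and the combined shares are stated as “over” 92% and 95%, the overlaps it prints (55 and 62 percentage points) should be read as approximate upper bounds, not reported values; the helper names are our own.

```python
def implied_overlap(automod: float, mods: float, combined: float) -> float:
    """Inclusion-exclusion: both = automod + mods - combined (all in percent)."""
    return automod + mods - combined


def union_bounds(automod: float, mods: float) -> tuple[float, float]:
    """Combined coverage is at least the larger share and at most
    the sum of both shares, capped at 100%."""
    return max(automod, mods), min(100.0, automod + mods)


# Figures reported above, in percent of viewing time.
for half, a, m, u in [("H1 2020", 65, 82, 92), ("H2 2020", 71, 86, 95)]:
    lo, hi = union_bounds(a, m)
    print(half, "bounds:", (lo, hi), "implied overlap:", implied_overlap(a, m, u))
```

The bounds also serve as a consistency check: each reported combined share falls between the larger individual share and 100%.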

Proactive and Manual Removals of Chat Messages